10 Popular Linguistic Experiment Examples in Labvanced

Language and speech researchers use online experiment platforms like Labvanced to run their studies because these platforms make it possible to recruit participants and collect data quickly.

By running experiments in a virtual language lab, publishing studies online, and sharing them through the web, linguists and cognitive psychologists can build their experiments quickly and without code, and complete their research faster.

Below, we highlight 10 popular linguistic experiments for studying speech perception and language comprehension that can be run in Labvanced, each of which demonstrates a different capability or feature of the platform.

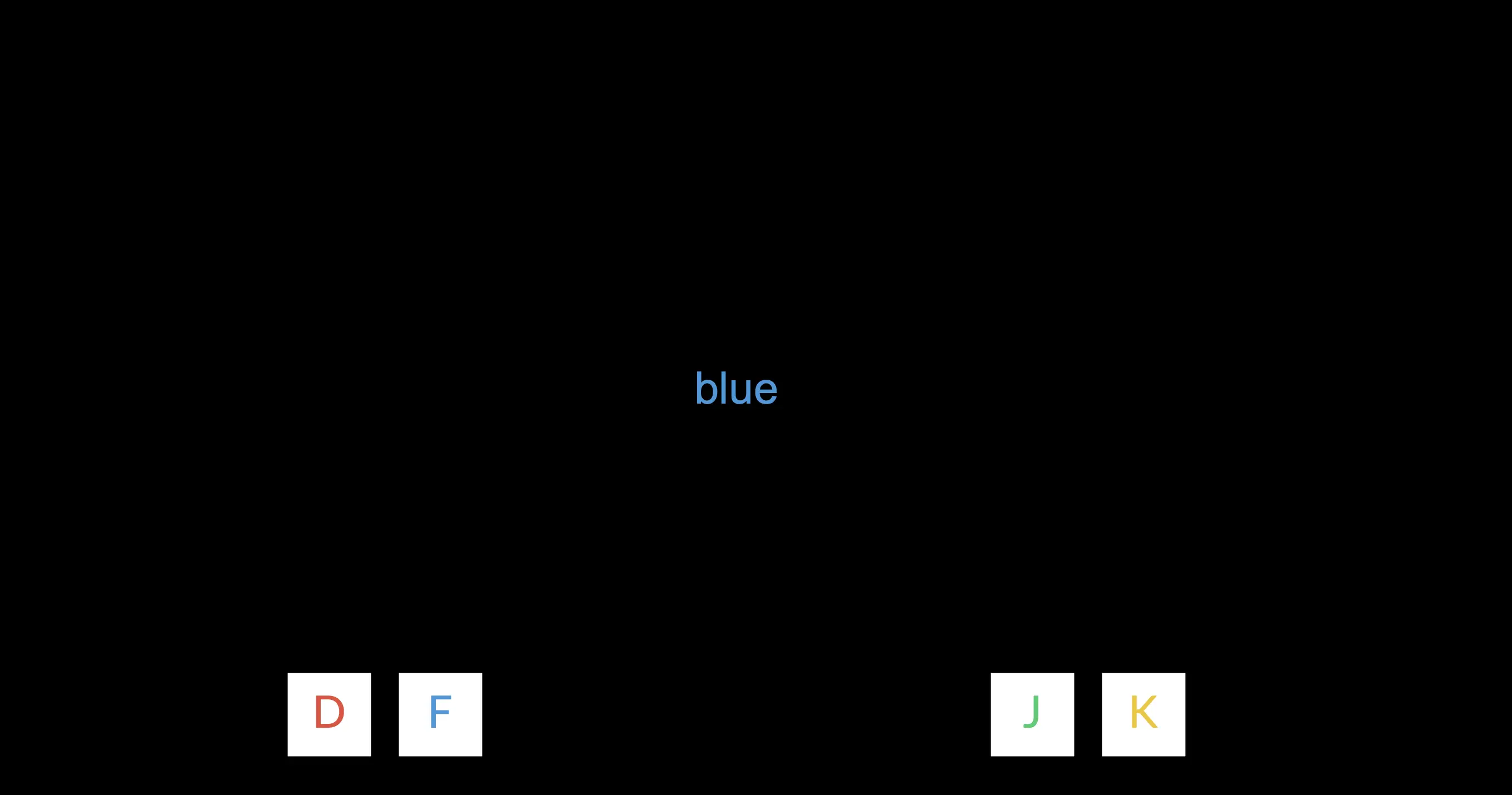

1. Multimodal Stroop Effect Task

The Multimodal Stroop Effect Task is a classic task that challenges participants’ cognitive associations.

In the study, words like ‘blue’ or ‘green’ are shown one by one in varying text colors that only sometimes match the color the written word names. This incongruence is what makes the task challenging.

The participant is instructed to focus on the text color and ignore the word's meaning. Distracting spoken words are also played during the experiment: a voice names one of the four featured colors.

In the training session, the participant practices focusing on the text color and clicking the corresponding button. The other two dimensions (the spoken word and the written text) are congruent with it and reflect the target color.

In the training example below, the correct response is ‘F’ because the text color is blue. The response is also reinforced by the other dimensions: the written word is ‘blue’ and the audio that plays automatically also says ‘blue.’

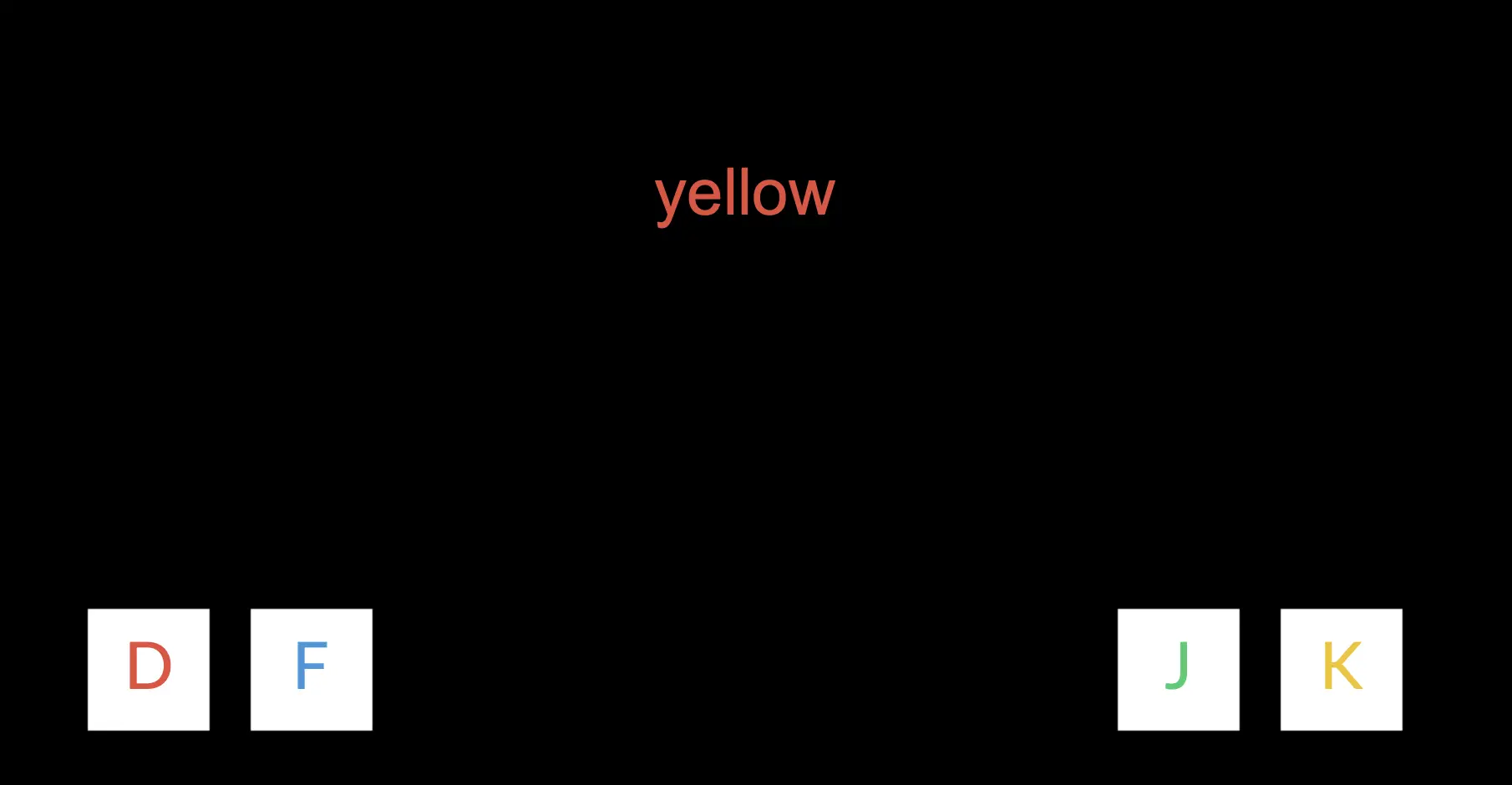

In the experiment, things become more challenging as the three dimensions are incongruent.

In the example below, the correct response is ‘D’ because the color is red, but the written word says ‘yellow’ and the audio voice prompts ‘blue.’

The participant must therefore stay focused and suppress competing associations, overriding the written- and spoken-language cues in order to pick the correct response.
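Labvanced builds this design without code, but the underlying trial logic is simple enough to sketch. The Python example below is a hypothetical illustration, not Labvanced's implementation: it creates training trials (all three dimensions name the same color) and incongruent trials (ink color, written word, and spoken word all differ) and scores a key press against the ink color only. Only the ‘F’ = blue and ‘D’ = red keys come from the examples above; the keys for green and yellow are placeholder assumptions.

```python
import random
from dataclasses import dataclass

COLORS = ["blue", "green", "red", "yellow"]

# Only blue -> F and red -> D are taken from the examples above;
# the keys for green and yellow are hypothetical placeholders.
RESPONSE_KEYS = {"blue": "F", "red": "D", "green": "J", "yellow": "K"}


@dataclass(frozen=True)
class StroopTrial:
    ink_color: str     # the dimension the participant must respond to
    written_word: str  # visual distractor: the word's meaning
    spoken_word: str   # auditory distractor: the word played aloud

    @property
    def correct_key(self) -> str:
        # The answer depends on the ink color alone.
        return RESPONSE_KEYS[self.ink_color]

    @property
    def fully_congruent(self) -> bool:
        return self.ink_color == self.written_word == self.spoken_word


def training_trial(rng: random.Random) -> StroopTrial:
    """Training: ink color, written word, and spoken word all name the same color."""
    color = rng.choice(COLORS)
    return StroopTrial(color, color, color)


def incongruent_trial(rng: random.Random) -> StroopTrial:
    """Experiment: the three dimensions name three different colors."""
    ink, written, spoken = rng.sample(COLORS, 3)
    return StroopTrial(ink, written, spoken)


def is_correct(trial: StroopTrial, pressed_key: str) -> bool:
    # Case-insensitive comparison of the pressed key with the expected key.
    return pressed_key.upper() == trial.correct_key
```

For the incongruent example above, `StroopTrial("red", "yellow", "blue").correct_key` is "D": the written and spoken words never change the expected answer.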

📌 Publication Highlight: The influence of language dominance on linguistic and nonlinguistic tasks

In this study, conducted in Labvanced, researchers created a series of linguistic and nonlinguistic tasks to determine how language dominance influences interference and facilitation effects in each type of task, and how performance on the linguistic and nonlinguistic tasks is related. For example, the Picture-Word Interference (PWI) Task and the Nonlinguistic Spatial Stroop Task were administered for this purpose. The results suggest that language dominance does modulate performance on both linguistic and nonlinguistic tasks.

Reference: Gálvez-McDonough, A. F., Blumenfeld, H. K., Barragán-Diaz, A., Anthony, J. J. R., & Riès, S. K. (2024). Influence of language dominance on crosslinguistic and nonlinguistic interference resolution in bilinguals. Bilingualism: Language and Cognition, 1-14.

2. Finish the Sentence

This study, published by the UCLA Linguistics Department, examines how adult native speakers of American English finish sentences.

Participants listen to sentence fragments and then complete each fragment into a full sentence out loud, with their voice recorded through their computer microphone.

Participants are instructed to respond with the first thing that comes to mind, without hesitating.

The general study procedure is as follows:

- The participant tests the recording feature of Labvanced to ensure their recording works.

- The participant moves to the next screen and clicks ‘Play’ to hear the sentence fragment.

- Then, the participant is prompted to think of a way to complete the sentence, starting with the fragment they just heard.

- The participant clicks the record button and says the whole sentence out loud.

The study aims to advance scientific knowledge about speech and human language. The researchers state that the insights gathered will have positive implications for several areas, including computer technology, language teaching, and speech pathology treatment.

📌 Publication Highlight: Maze-based sentence completion task

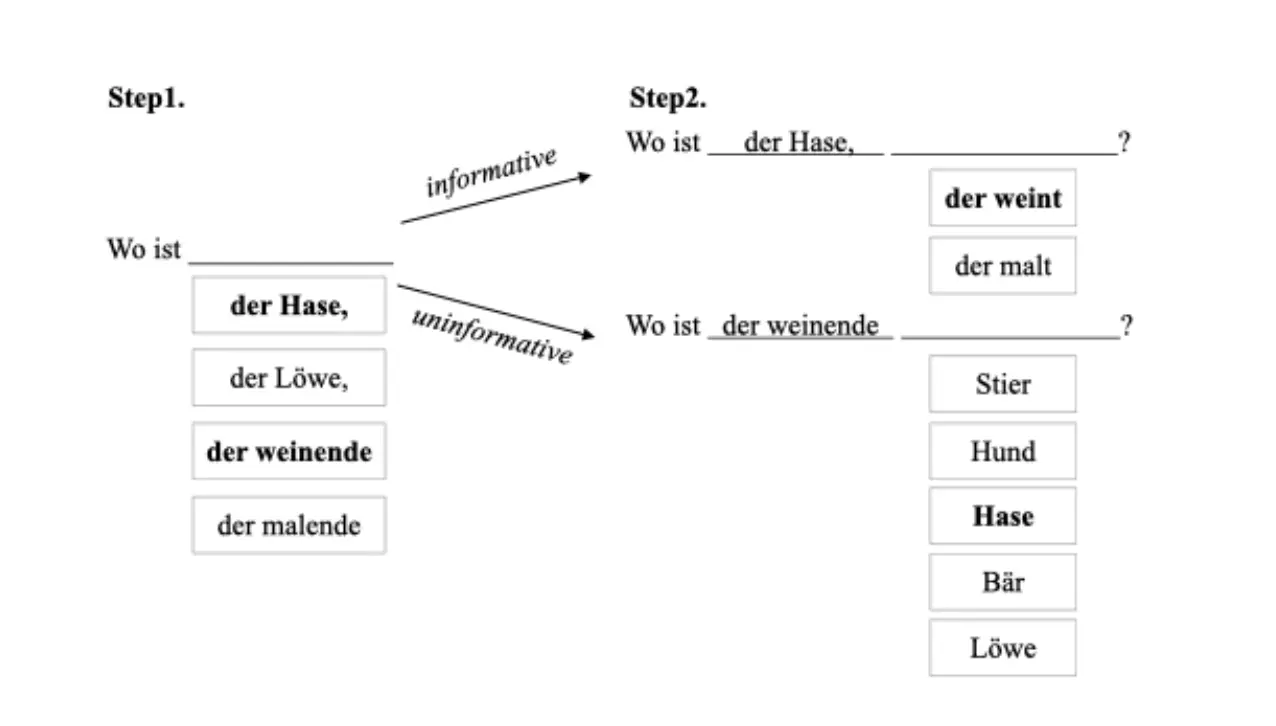

Another approach to sentence completion is to select or input a word rather than complete the sentence out loud (as in the study above). In the study described below, conducted in Labvanced, the researchers administered a maze-based sentence completion task: participants had to produce a description of a given target by consecutively selecting two parts of their expression from a list of options.

Reference: Li, M., Venhuizen, N. J., Jachmann, T. K., Drenhaus, H., & Crocker, M. W. (2023). Does informativity modulate linearization preferences in reference production? In Proceedings of the Annual Meeting of the Cognitive Science Society (Vol. 45, No. 45).

3. Dimensions & Sounds

In this speech and language experiment by the Max Planck Institute for Empirical Aesthetics in Frankfurt, the researchers set out to investigate how vocalizations are perceived.

Participants begin by filling out a short questionnaire about themselves. They then listen to sounds and vocalizations and, after each audio stimulus, rate the sound on two scales.

This experiment demonstrates how to incorporate a questionnaire at the beginning of the study and then use audio to study human sound perception of vocalizations.

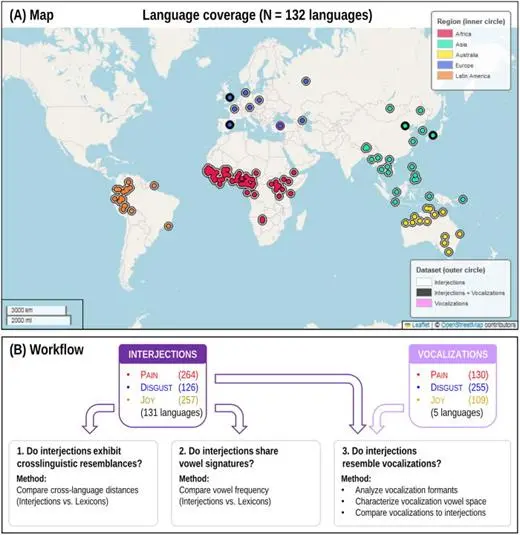

📌 Publication Highlight: Affective interjections and vocalizations across languages

This research, which used Labvanced, studied the vowel signatures of emotional interjections and nonlinguistic vocalizations that express pain, disgust, and joy across languages. The researchers analyzed the vowels of interjections from over 130 languages and reported several interesting findings.

For nonlinguistic vocalizations, each emotion appeared to have a distinct vowel signature: pain prompts open vowels such as [a], joy prompts front vowels such as [i], and disgust prompts schwa-like central vowels. For interjections, pain interjections feature a-like vowels and wide falling diphthongs, whereas joy and disgust interjections show no vowel regularities that extend geographically. These findings suggest that pain is the only affective experience with a preserved signature in both nonlinguistic vocalizations and interjections across languages.

Reference: Ponsonnet, M., Coupé, C., Pellegrino, F., Garcia Arasco, A., & Pisanski, K. (2024). Vowel signatures in emotional interjections and nonlinguistic vocalizations expressing pain, disgust, and joy across languages. The Journal of the Acoustical Society of America, 156(5), 3118-3139.

4. Spanish Pronunciation Study

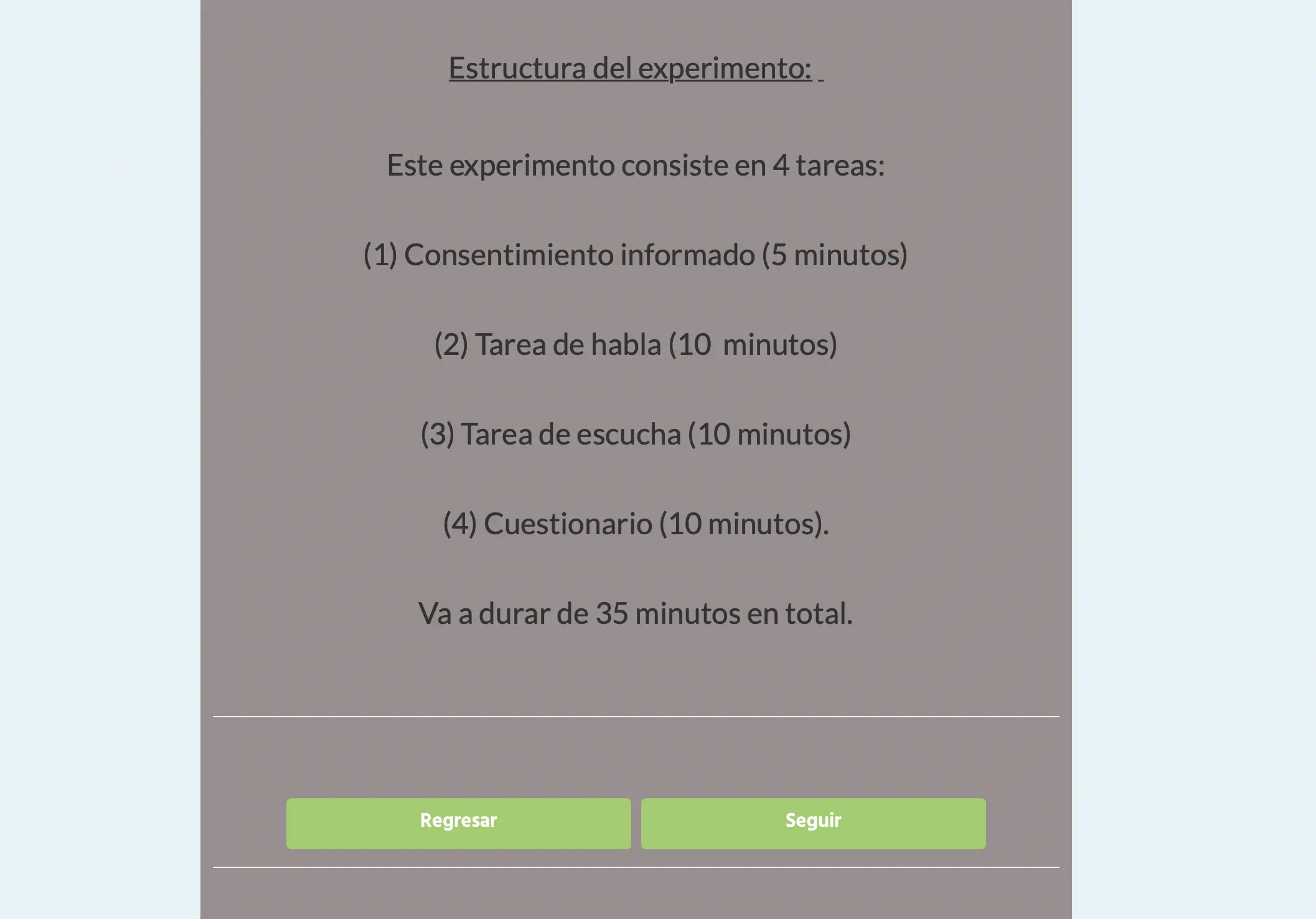

The Spanish Pronunciation Study is one of many experiments by the University of Toronto published in Labvanced. The experiment is in Spanish but can also be administered in Portuguese, and it tests the participant’s comprehension and language abilities through speaking and listening tasks.

In this study, the participant first reads information about the experimental procedure and then completes two short tasks of about 10 minutes each: the first focuses on speaking and reading, and the second on listening.

At the end, there is a questionnaire so the participant can provide basic information about themselves, as well as any relevant information about their language learning background.

Labvanced is used for many language learning and bilingualism studies. Researchers can design their experiment in any language and restrict a study to speakers of specific languages. They can then share the study internationally so that speakers of different languages can participate from around the globe, or keep it local to examine language learning in a specific group, such as university students learning a second language.

📌 Publication Highlight: Prosody of Montevideo Spanish

This study set out to provide the first complete prosodic description of Montevideo Spanish (MS), a Rioplatense variety spoken in Uruguay that has roots in Castilian Spanish and Italian, as well as influences from other languages, including Guarani, Quechua, and Portuguese. The description covers the variety's intonation, rhythm, and tempo. The researchers used Labvanced to record participants across different production tasks in order to capture a wide range of naturalistic speech.

Reference: Machado, V., & Escobar, L. (2023). The prosody of Montevideo Spanish: an intonational, rhythmic and tempo description. Canadian Journal of Linguistics.

5. Voice & Well-being

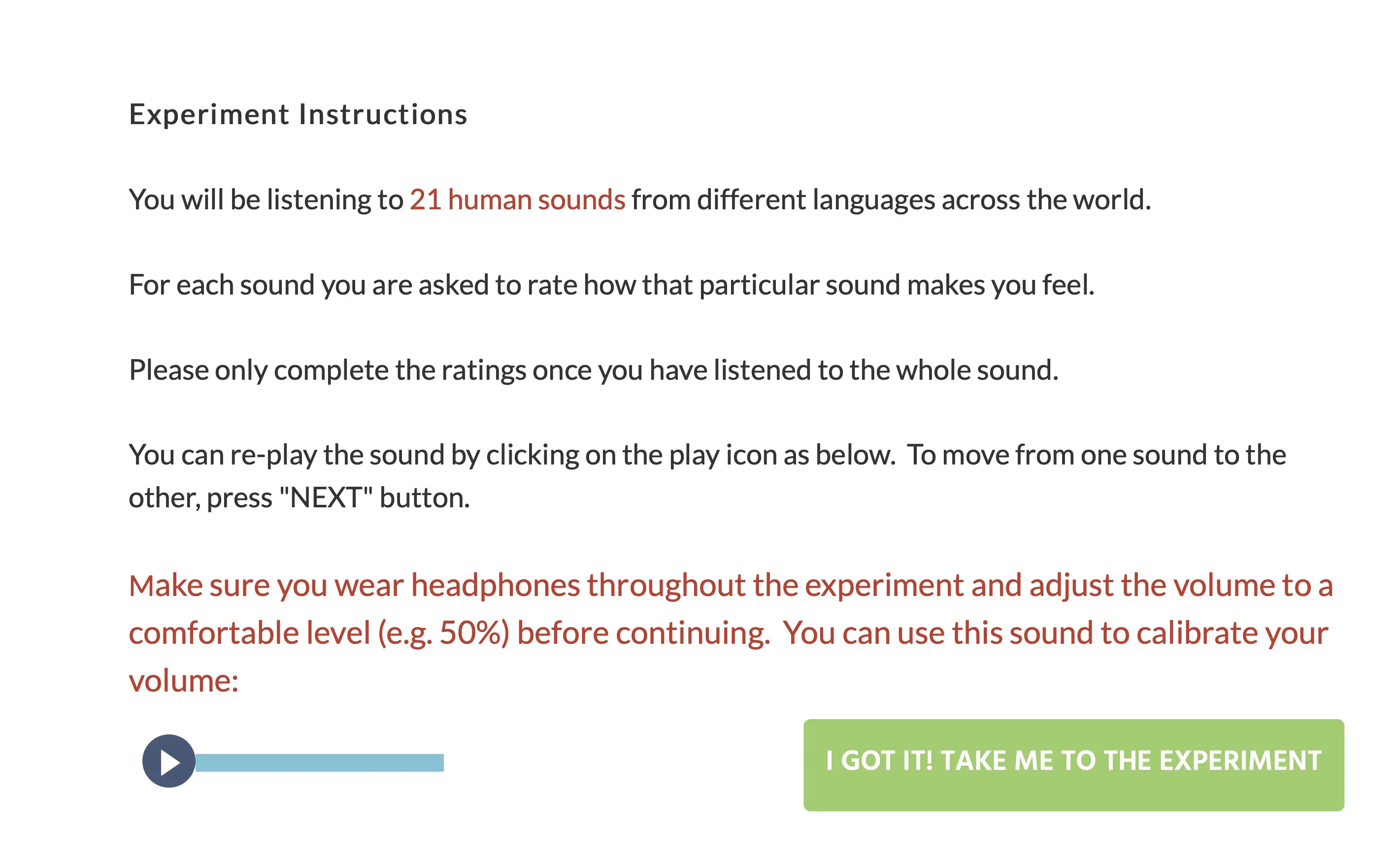

In this study, the relationship between sound perception and feelings is assessed. Participants listen to 21 human sounds from all over the world and, after each clip, rate how the sound made them feel on 5-point Likert scales.

The experiment opens with instructions. Toward the end of the instructions, a volume control lets the participant calibrate the upcoming audio to a comfortable level.

After calibrating and adjusting the sound, the experiment begins.

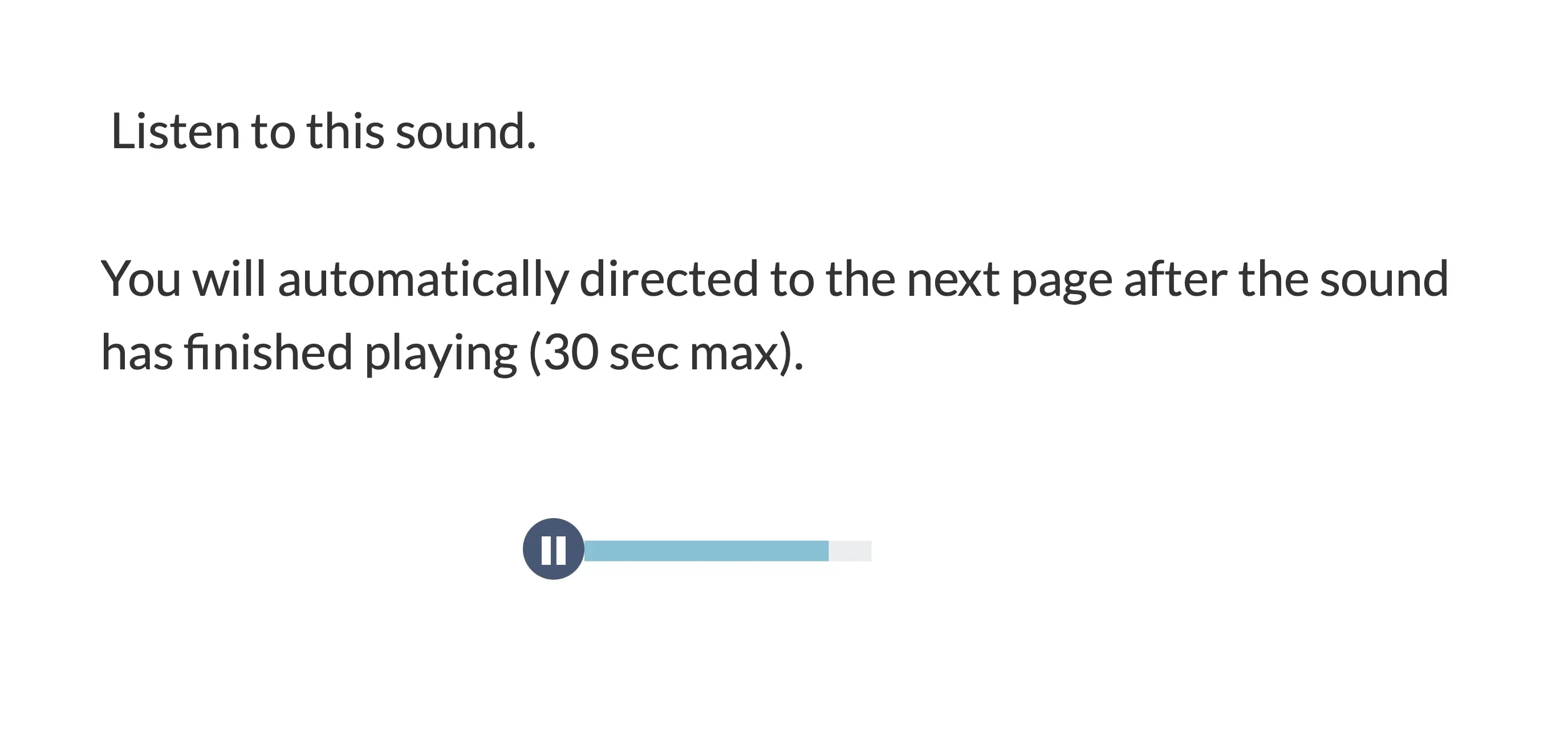

The participant then hears a sound clip that lasts about 30 seconds:

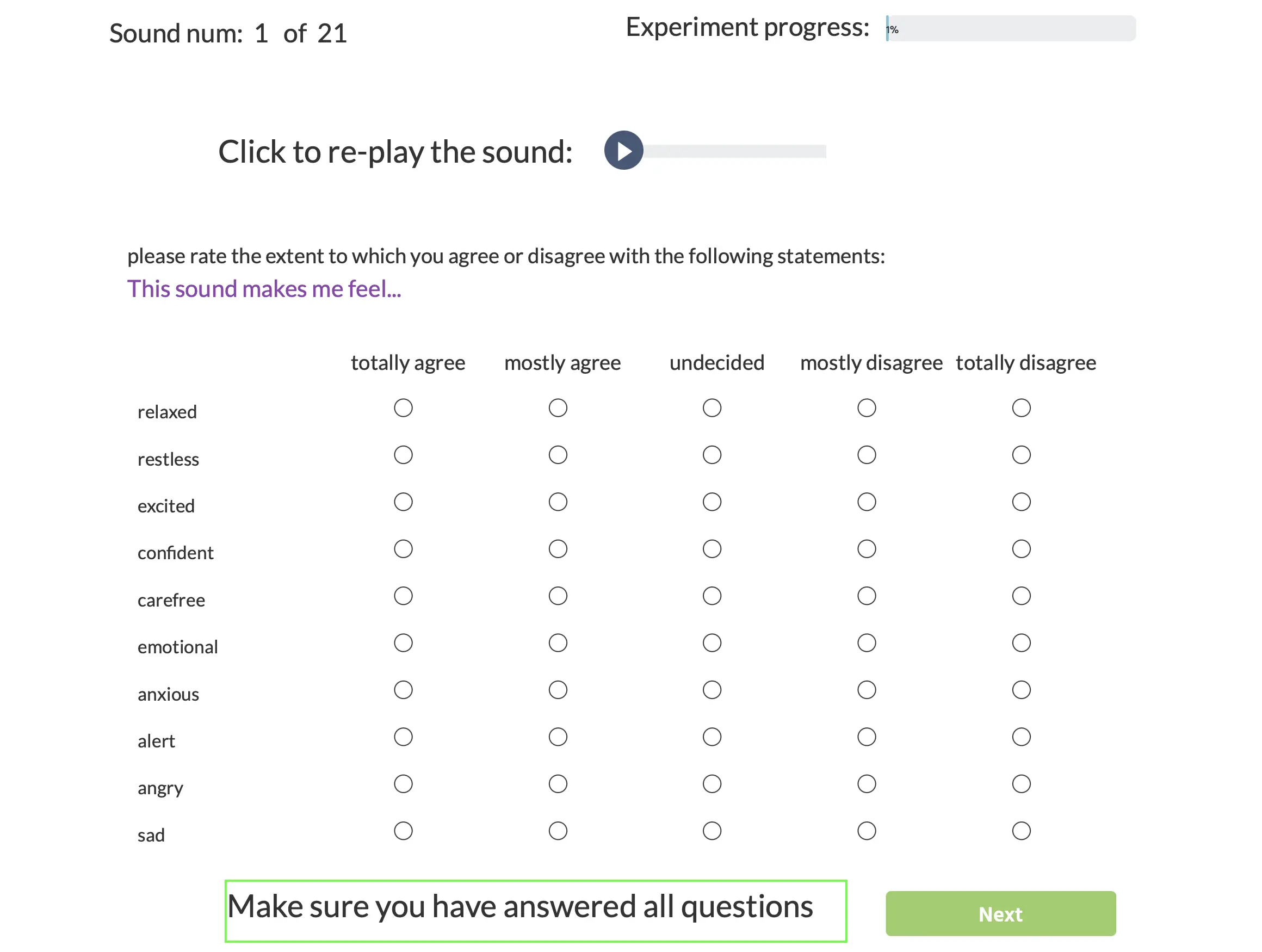

After the sound has played, the participant indicates on a 5-point Likert scale to what extent certain emotions and feelings (such as confidence, sadness, or alertness) were evoked by the audio.

This experiment is a great example of how to present audio recordings and then a questionnaire so the participant can provide a response to the sound, language, or vocalization they perceived.
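Once such responses are exported, a common first analysis step is to average the ratings per sound and per feeling. The sketch below assumes a hypothetical long-format export with one row per participant, sound, and feeling; the row layout and IDs are illustrative and are not Labvanced's actual export format.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical long-format row: (participant_id, sound_id, feeling, rating 1-5).
Response = tuple[str, str, str, int]


def mean_ratings(responses: list[Response]) -> dict[tuple[str, str], float]:
    """Average the 5-point Likert ratings for each (sound, feeling) pair."""
    buckets: dict[tuple[str, str], list[int]] = defaultdict(list)
    for _participant, sound, feeling, rating in responses:
        # Reject values that cannot come from a 5-point scale.
        if not 1 <= rating <= 5:
            raise ValueError(f"rating {rating} is outside the 1-5 scale")
        buckets[(sound, feeling)].append(rating)
    return {key: mean(values) for key, values in buckets.items()}
```

Keeping the data in long format means new feelings or sounds need no schema change; each (sound, feeling) pair simply becomes another key.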

📌 Publication Highlight: Preferences for singing voice

This study aimed to predict what drives participants’ preferences when ‘liking’ a vocalist by assessing perceptual and acoustic features, an important topic in the psychology of music preference.

Labvanced experiment:

- Perceptual ratings developed for this experiment, on bipolar scales from 1 to 7 with contrasting anchor words at each pole, asking participants to rate pitch accuracy, loudness, tempo, articulation, breathiness, resonance, timbre, attack/voice onset, and vibrato. Forty-two participants rated 96 stimuli on 10 different scales.

- The 18-item General Musical Sophistication subscale of the Goldsmiths Musical Sophistication Index (Gold-MSI)

- Ten-Item Personality Inventory (TIPI)

- Short Test of Music Preferences, Revised (STOMP-R)

Key findings: Acoustic and low-level features derived from music information retrieval (MIR) barely explained variance in participants’ liking ratings. In contrast, perceptual features of the voices achieved around 43% prediction of the liking ratings, suggesting that singing voice preferences are grounded not in acoustic attributes per se but in features as listeners perceive them. This finding highlights the importance of individual perception in the psychology of music preference.

Reference: Bruder, C., Poeppel, D., & Larrouy-Maestri, P. (2024). Perceptual (but not acoustic) features predict singing voice preferences. Scientific Reports, 14(1), 8977.

6. Lexical Decision Tasks

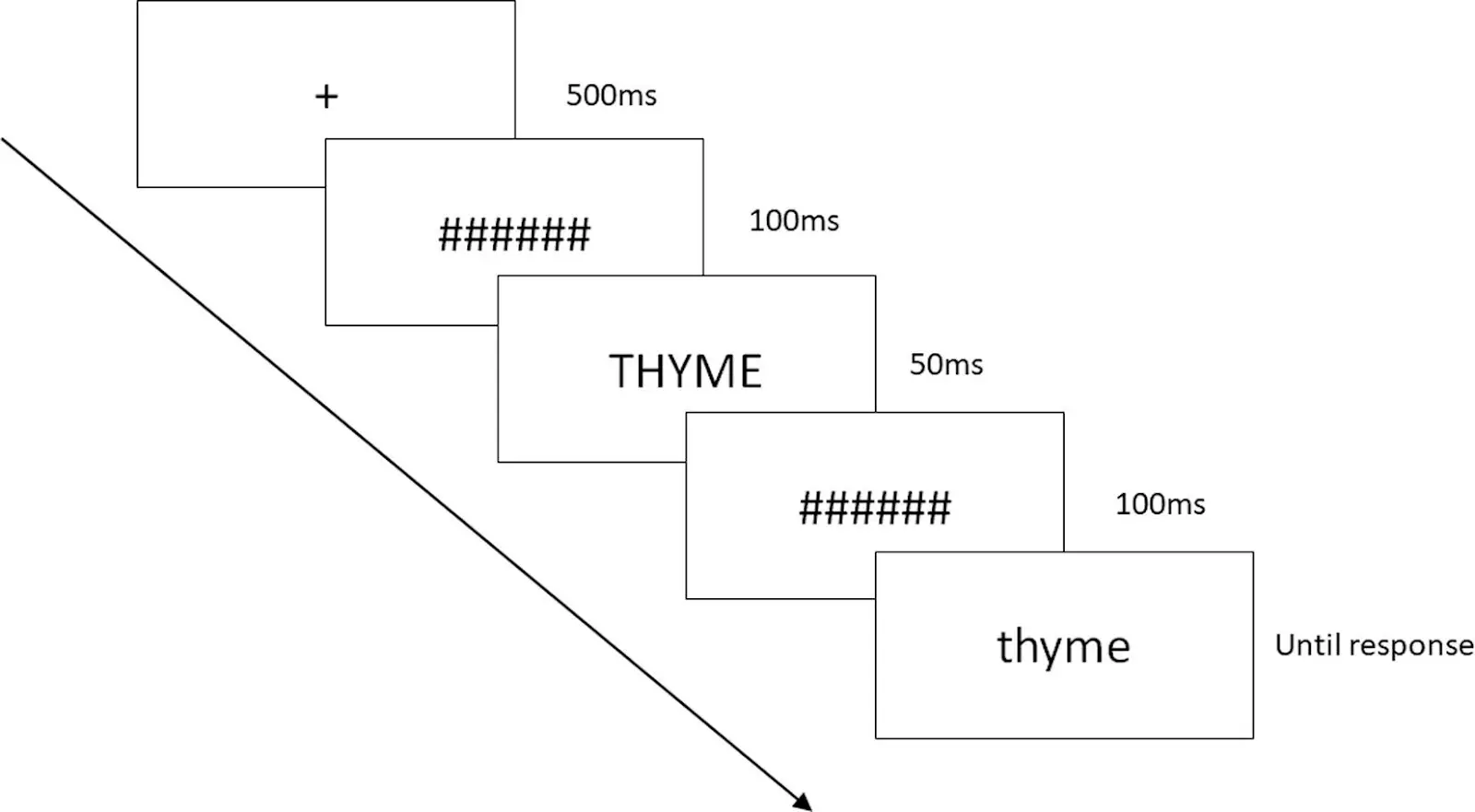

The Lexical Decision Task (LDT) is another great example of a language-based task: participants indicate whether a presented string of letters is a word or a non-word in the target language. Because of its popularity in the field, there are several variations of the task.
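A typical LDT analysis reports accuracy and mean reaction time (RT) separately for words and non-words, with RTs usually taken from correct trials only. The sketch below is a minimal, hypothetical example; the trial fields and the 200 to 2000 ms RT trimming window are assumptions chosen for illustration, not values from a specific study.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class LDTTrial:
    letter_string: str
    is_word: bool    # ground truth: real word or non-word
    said_word: bool  # participant's response: "word" or "non-word"
    rt_ms: float     # reaction time in milliseconds


def summarize(trials: list[LDTTrial], min_rt: float = 200.0,
              max_rt: float = 2000.0) -> dict[str, dict[str, float]]:
    """Accuracy per condition, plus mean RT of correct trials inside [min_rt, max_rt]."""
    summary = {}
    for label, condition in (("word", True), ("nonword", False)):
        subset = [t for t in trials if t.is_word == condition]
        correct = [t for t in subset if t.said_word == t.is_word]
        # Trim implausibly fast or slow responses before averaging.
        kept_rts = [t.rt_ms for t in correct if min_rt <= t.rt_ms <= max_rt]
        summary[label] = {
            "accuracy": len(correct) / len(subset) if subset else float("nan"),
            "mean_rt_ms": mean(kept_rts) if kept_rts else float("nan"),
        }
    return summary
```

Accuracy is computed before RT trimming, so a correct but very slow response still counts as correct; only its RT is excluded.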

📌 Publication Highlight: Acquired anosmia and odor-related language comprehension

In this study, conducted in Labvanced, researchers assessed whether acquired anosmia (loss of the sense of smell later in life) impacts the comprehension of odor-related language. The researchers administered a series of tasks to get a full picture of odor-related memory in anosmics and controls. Interestingly, the study found no evidence that acquired anosmia impairs the comprehension of odor or taste words, although emotional associations with odor and taste words were altered in anosmics, who gave more positive ratings. Overall, these findings suggest that language processing may in some cases be independent of the ability to have an olfactory sensory experience.


7. Semantic Networking

In this psycholinguistic study by Temple University, the experiment is designed to test the strength of relationships between words (e.g., semantic and phonological relationships).

Participants see a word, followed by a letter sequence. If the letter sequence is a word in English, the participant presses ‘Y’ on the keyboard; if it is not, the participant presses ‘N’.

The design is simple and straightforward, but it demonstrates how to collect participant responses using button presses after presenting words visually in a particular sequence.
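One common way to quantify relationship strength in a prime-then-target design like this is the priming effect: how much faster participants press ‘Y’ for a real-word target after a related prime than after an unrelated one. The source does not say how the Temple University study scores its data, so the sketch below is an assumption; the relation labels and the trial tuple layout are hypothetical.

```python
from statistics import mean

# Hypothetical trial layout: (relation, target_is_word, key_pressed, rt_ms),
# where relation is e.g. "semantic", "phonological", or "unrelated".
Trial = tuple[str, bool, str, float]


def priming_effects(trials: list[Trial]) -> dict[str, float]:
    """Priming effect in ms: mean RT after unrelated primes minus mean RT after related primes.

    Only correct 'Y' responses to real-word targets are included.
    Positive values mean the related prime sped up recognition.
    """
    rts: dict[str, list[float]] = {}
    for relation, target_is_word, key, rt in trials:
        if target_is_word and key.upper() == "Y":
            rts.setdefault(relation, []).append(rt)
    baseline = mean(rts["unrelated"])
    return {rel: baseline - mean(vals) for rel, vals in rts.items() if rel != "unrelated"}
```

A larger positive value for a relation type would be read as a stronger link between prime and target in the mental lexicon.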

📌 Publication Highlight: Eye tracking, language and visual attention

This study investigated how semantic information, conveyed through words or pictures, influences visual attention in a spatial cueing task. The researchers used Labvanced’s webcam eye-tracking as a validity check to ensure participants maintained central fixation.


The study used a repeated-measures design and consisted of two experiments:

- Experiment 1: Participants viewed either real words or pseudowords as primes, followed by a spatial cue directing them to a target. The aim was to assess how these primes influenced target detection speed.

- Experiment 2: Participants were presented with real objects or pseudo-objects as primes, which either matched or mismatched the target. This experiment aimed to explore the effects of semantic and perceptual congruency on attentional engagement and target detection.

Data collected: Reaction times, eye-tracking data (participants' fixations and attention patterns), and details on the types of primes (real words, pseudowords, known objects, unknown objects) and their match status (match vs. mismatch) with the targets.

Findings: Semantic knowledge, conveyed through real words and objects, significantly facilitates faster target detection and enhances attentional capture, even when the primes do not provide spatial information about the targets.

Reference: Calignano, G., Lorenzoni, A., Semeraro, G., & Navarrete, E. (2024). Words before pictures: the role of language in biasing visual attention. Frontiers in Psychology, 15, 1439397.

8. Speech-in-Noise Test

In this speech-in-noise study, researchers used Labvanced to examine subjective and objective measures of listening accuracy and effort. Sixty-seven participants (42 with normal hearing and 25 with hearing loss) completed the Effort Assessment Scale (EAS) and a sentence recognition task. BKB sentences were presented in speech-shaped noise at signal-to-noise ratios (SNRs) of -8, -4, 0, +4, +8, and +20 dB. Participants repeated the sentences aloud, and their responses were recorded via webcam and scored by research assistants.

The study used several outcome measures to assess accuracy and listening effort: objective intelligibility, subjective intelligibility, subjective listening effort, subjective tendency to give up listening, and verbal response time (VRT). Subjective listening effort was the first measure to show sensitivity to worsening SNR, followed by subjective intelligibility, objective intelligibility, subjective tendency to give up listening, and VRT.

Reference: Wiggins, I. M., Stacey, J. E., Naylor, G., & Saunders, G. H. (2025). Relationships between subjective and objective measures of listening accuracy and effort in an online speech-in-noise study. Ear and Hearing, 10-1097.
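Presenting sentences at a fixed SNR means scaling the noise relative to the speech before mixing. SNR in dB is 10 × log10(P_speech / P_noise), where P is mean signal power, so the noise must be multiplied by g = sqrt(P_speech / (P_noise × 10^(SNR/10))). The pure-Python sketch below applies that formula. It is a generic illustration, not the stimulus pipeline used by Wiggins et al., and it does not generate speech-shaped noise; any noise samples of matching length can be passed in.

```python
import math


def mean_power(samples: list[float]) -> float:
    """Mean power of a signal: the average of its squared samples."""
    return sum(s * s for s in samples) / len(samples)


def snr_db(speech: list[float], noise: list[float]) -> float:
    """SNR in dB = 10 * log10(P_speech / P_noise)."""
    return 10 * math.log10(mean_power(speech) / mean_power(noise))


def mix_at_snr(speech: list[float], noise: list[float], target_snr_db: float):
    """Scale the noise to reach target_snr_db, then add it sample by sample to the speech.

    Returns (mixture, scaled_noise). Both inputs must have the same length.
    """
    if len(speech) != len(noise):
        raise ValueError("speech and noise must have the same length")
    gain = math.sqrt(mean_power(speech) / (mean_power(noise) * 10 ** (target_snr_db / 10)))
    scaled_noise = [gain * n for n in noise]
    mixture = [s + n for s, n in zip(speech, scaled_noise)]
    return mixture, scaled_noise


# The SNR conditions listed in the study description above.
SNR_CONDITIONS_DB = [-8, -4, 0, 4, 8, 20]
```

Scaling the noise rather than the speech keeps the sentence level constant across conditions; a real pipeline would also need to guard against clipping and calibrate the overall playback level.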

9. Adult Reading Test

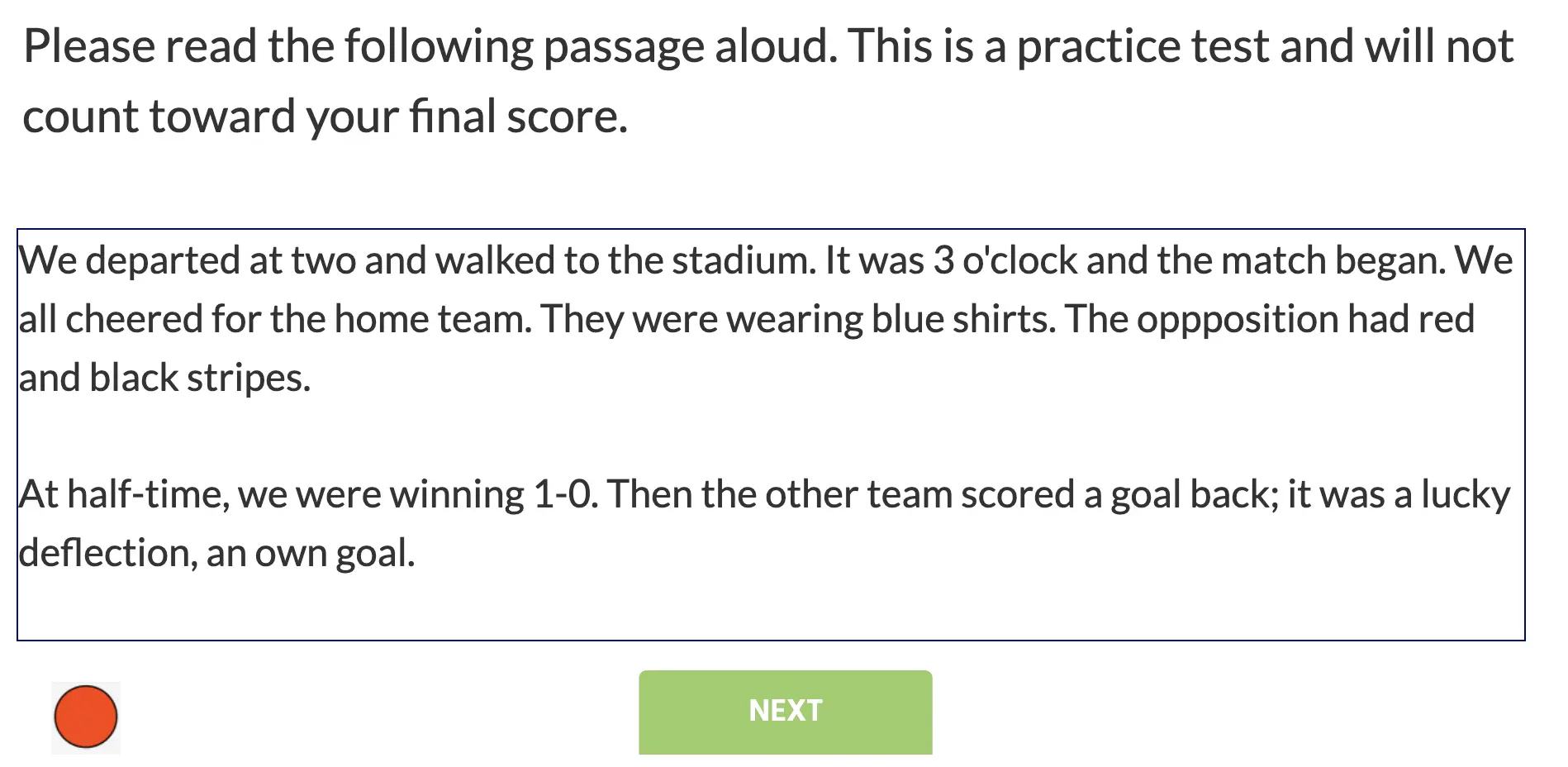

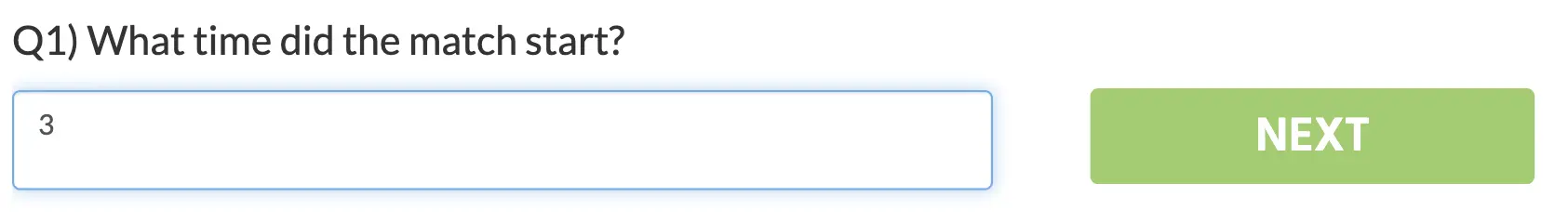

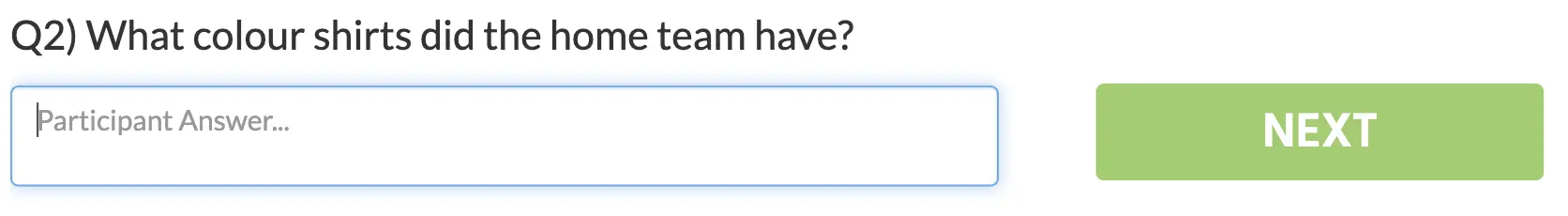

The Adult Reading Test by Nottingham Trent University tests participants’ ability to read a text passage aloud, using Labvanced’s voice recording feature, and then answer questions about the passage.

Before the training session begins, the study asks participants for their email address so that their responses can be linked to those from a previous section in Labvanced.

In the training session, the participant records themselves reading the prompted passage out loud.

After the voice recording is complete, a series of questions about the passage follows.

The Adult Reading Test captures several different types of measurements, from voice recordings to questionnaire answers. It is a great way to measure language comprehension and mastery, and it can be adapted to other languages and population groups.

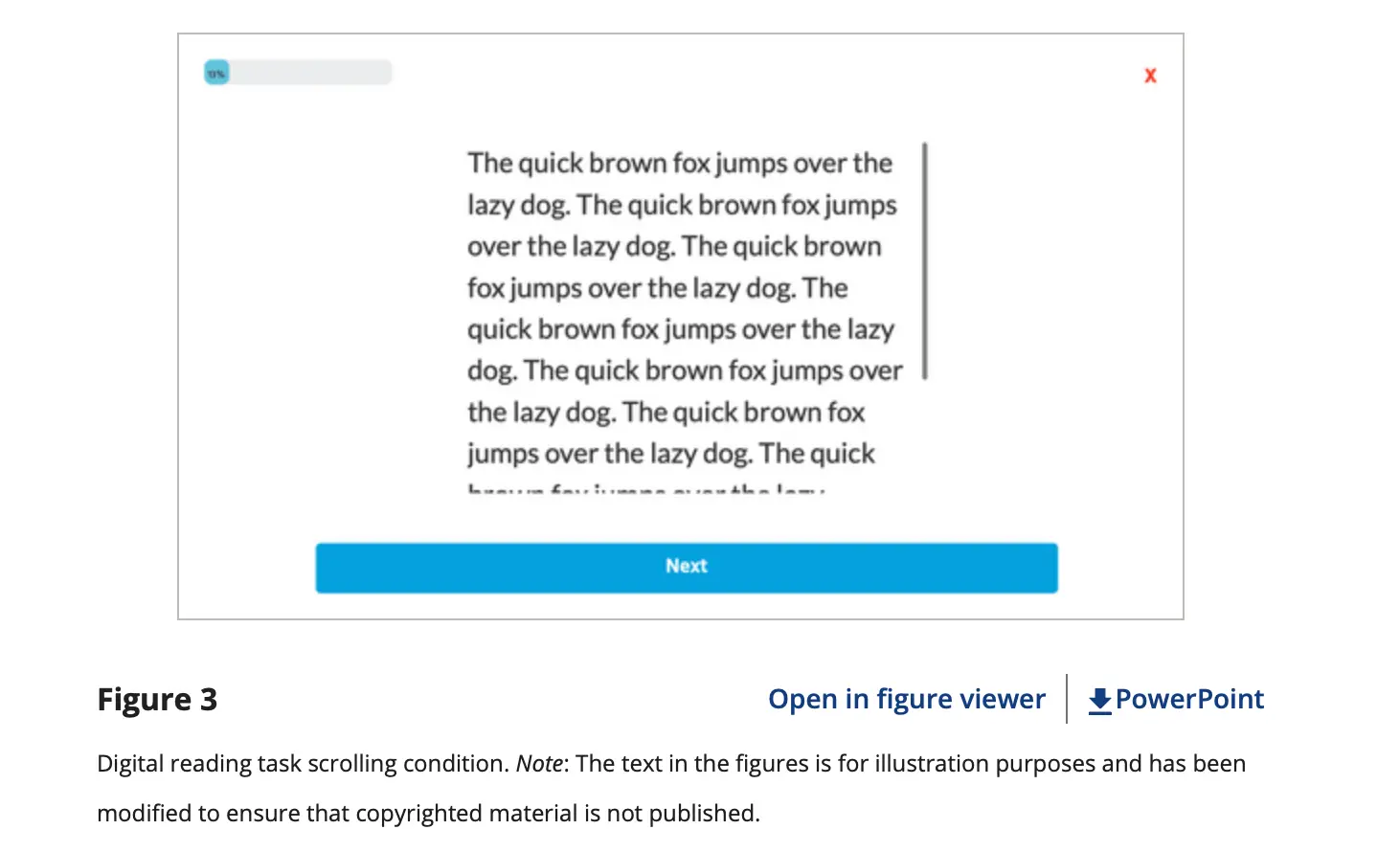

📌 Publication Highlight: Digital reading comprehension

This study aimed to understand how digital reading environments, with features such as scrolling and hyperlinks, affect children's reading comprehension.

The researchers used Labvanced for:

- Task Design: Integrating the different conditions (clicking, scrolling, hyperlink, and combined).

- Programming Navigation Features: Navigation buttons (e.g., next, back, back to story) and hyperlink functionalities to mimic common digital reading experiences.

- Participant Monitoring: Facilitation of remote participation, allowing children to complete the tasks from home while being monitored by research assistants via video conferencing tools.

- Data Collection: Recording metrics such as the time participants spent reading each passage, the time taken to refer back to the text while answering questions, and the frequency of back-button activations.

The findings showed that interacting with hovering hyperlinks that displayed definitions negatively impacted children's reading comprehension, whereas scrolling had no adverse effect on comprehension.

Reference: Krenca, K., Taylor, E., & Deacon, S. H. (2024). Scrolling and hyperlinks: The effects of two prevalent digital features on children’s digital reading comprehension. Journal of Research in Reading.

10. Prosodic Awareness Task

A prosodic awareness task assesses an individual's ability to recognize and manipulate the prosodic features of language. In the Labvanced demo below, participants are asked to pronounce a word and then identify which syllable in that word is stressed.

Additional Examples

Editing Text Dynamically

In some cases, you may be interested in determining how participants actively edit written text. The video below shows how you can track real-time text editing, including mouse clicks, keypresses, and paragraph changes using Labvanced.


Audibility Screening Measures

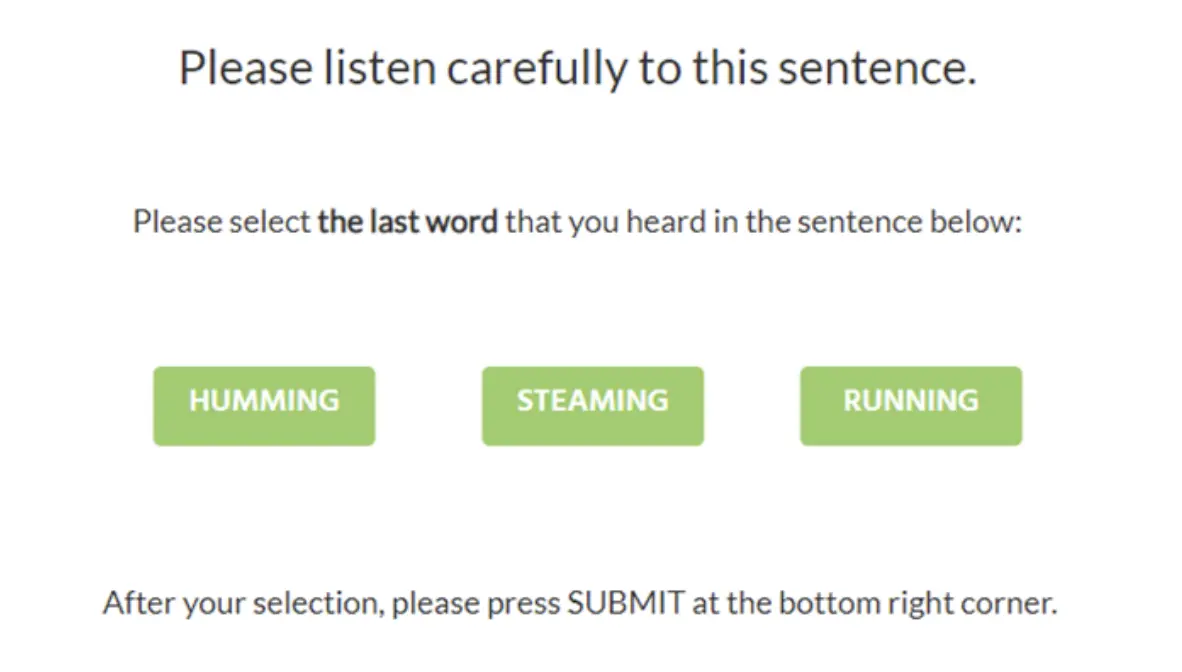

In certain cases, it may be important to check the basic audibility of auditory stimuli before proceeding to the main experiment trials. This was the approach taken in a study comparing the feasibility of remote versus face-to-face neuropsychological testing for dementia research.

The researchers began by asking participants to listen to a set of 10 sentences from the Bamford-Kowal-Bench (BKB) list, presented in Labvanced.

In the example above, the spoken sentence was “The car engine is running”, and participants had to indicate the last word they heard. For each sentence, two foils were displayed alongside the target, both of which made sense in the sentence in place of the target. One of the foils was also chosen to loosely rhyme with the target word (here, “humming”).

Reference: Requena-Komuro, M. C., Jiang, J., Dobson, L., Benhamou, E., Russell, L., Bond, R. L., ... & Hardy, C. J. (2022). Remote versus face-to-face neuropsychological testing for dementia research: a comparative study in people with Alzheimer’s disease, frontotemporal dementia and healthy older individuals. BMJ Open, 12(11), e064576.
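Scoring a screen like this is straightforward, and because each item offers three options, guessing alone is right about one time in three. The sketch below shuffles the target and its two foils, scores the responses, and computes how likely a participant would be to pass by chance (a binomial tail). The pass criterion of 8 out of 10 and the second foil ‘starting’ are hypothetical; only ‘running’ and ‘humming’ come from the example above.

```python
import math
import random


def build_options(target: str, foils: list[str], rng: random.Random) -> list[str]:
    """Return the target word and its foils in a random on-screen order."""
    options = [target, *foils]
    rng.shuffle(options)
    return options


def score(responses: list[str], targets: list[str]) -> int:
    """Number of items where the selected word matches the sentence-final target."""
    if len(responses) != len(targets):
        raise ValueError("one response is needed per target")
    return sum(r == t for r, t in zip(responses, targets))


def chance_of_passing(n_items: int = 10, n_options: int = 3, min_correct: int = 8) -> float:
    """Probability of at least min_correct hits out of n_items by guessing (binomial tail)."""
    p = 1 / n_options
    return sum(
        math.comb(n_items, k) * p**k * (1 - p) ** (n_items - k)
        for k in range(min_correct, n_items + 1)
    )
```

With these defaults, `chance_of_passing()` equals 201/59049, roughly 0.34%, so a passing score is very unlikely to reflect guessing alone.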

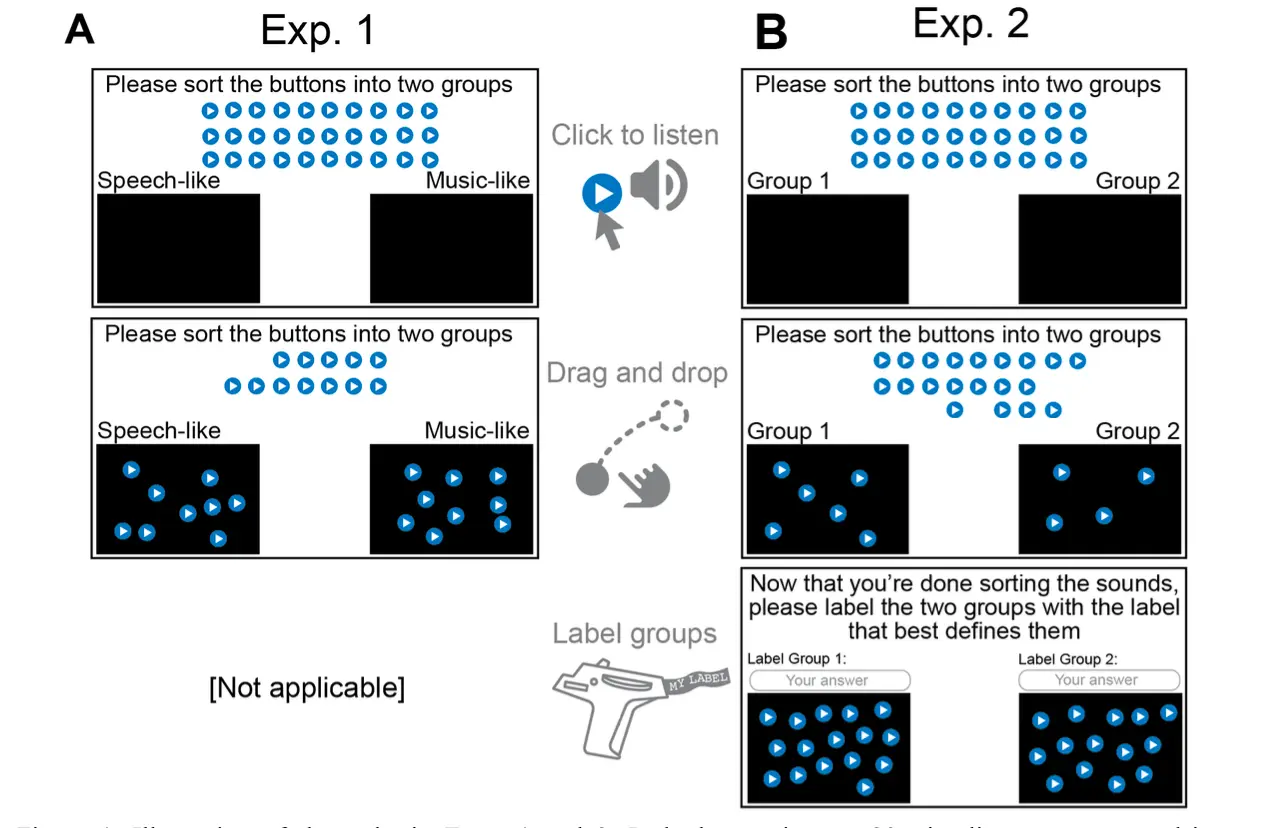

Dragging and Dropping Stimuli for Categorization

This study examined how participants classify sound clips as ‘speech-like’ or ‘music-like’.

Labvanced experiment: Participants were asked to classify 30 recordings of dùndún drumming (the dùndún is a West African drum that is also used as a speech surrogate). The researchers aimed to determine potential predictors of music and speech categories. Fifteen of the recordings were treated as ‘music’ and consisted of Yorùbá àlùjó (dance) rhythms, while the other 15 were ‘speech surrogate’ recordings containing Yorùbá proverbs and oríkì (poetry). Participants categorized the audio stimuli by dragging and dropping them, and could play each recording freely (see image below). Different participants took part in each experiment.

In the first experiment, the categories were provided, namely ‘speech-like’ and ‘music-like’. In the second experiment, participants had to decide on their own which two categories to use to differentiate the sounds, and then label them.

Findings: Hierarchical clustering of participants’ stimulus groupings shows that the speech/music distinction does emerge but is not primary. Further analysis of the free-response task showed that the labels participants assigned converge with acoustic predictors of the categories. This finding supports the effect of priming in discriminating between music and speech, and sheds new light on how such common auditory signals are categorized.

Reference: Fink, L., Hörster, M., Poeppel, D., Wald-Fuhrmann, M., & Larrouy-Maestri, P. (2023). Features underlying speech versus music as categories of auditory experience. Preprint.
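Hierarchical clustering of groupings needs a measure of how often stimuli were put together. One common input is a co-occurrence matrix: the proportion of participants who placed each pair of recordings in the same category. The sketch below assumes each participant's drag-and-drop result is exported as a mapping from stimulus ID to category label; it is a generic illustration, not the analysis pipeline of Fink et al., and the IDs and labels are hypothetical.

```python
from itertools import combinations


def co_occurrence(groupings: list[dict[str, str]], stimuli: list[str]) -> dict[tuple[str, str], float]:
    """Proportion of participants who placed each pair of stimuli in the same category.

    groupings: one dict per participant, mapping stimulus ID -> category label.
    Labels only need to be consistent within a participant, so participant-defined
    categories from a free-labeling condition work as well as provided ones.
    """
    pairs = list(combinations(sorted(stimuli), 2))
    counts = dict.fromkeys(pairs, 0)
    for grouping in groupings:
        for a, b in pairs:
            if grouping[a] == grouping[b]:
                counts[(a, b)] += 1
    return {pair: count / len(groupings) for pair, count in counts.items()}
```

Converting each proportion to a distance (1 minus the proportion) gives pairwise distances that a hierarchical clustering routine, such as SciPy's `scipy.cluster.hierarchy.linkage`, could accept as a condensed distance vector; the pairs here are generated in i < j order over the sorted stimulus IDs.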

Conclusion

Together, these 10 linguistic experiments and the additional examples show not only what you can do in Labvanced but also how researchers at various universities are using online experiments to record data and responses as they study speech, language, and perception.