Visual Search Task: A Comprehensive Guide for Researchers

Visual search tasks involve identifying a target among a variety of distractors and play a vital role in studying how humans process visual information. These tasks also offer valuable insights into the cognitive functions underlying visual search.

Here, we explain the visual search task and its variations, discuss the cognitive processes involved (from attention to processing speed), cover data collection, and describe applications in cognitive psychology, clinical psychology, and other fields.

Visual Search Tasks - Explained

A visual search task is a type of attention task in which participants are asked to detect a target stimulus, whose presence and location are unknown, among a set of distractors in a visual field as quickly and accurately as possible (Chesham et al., 2019).

The fundamental elements of visual search tasks are (Wickens, 2023):

- Target: A specific stimulus, object, or feature that is to be identified or located.

- Distractors: Non-target elements that increase the difficulty of the search.

- Search field: The region of space over which the search is carried out.

Visual Search Tasks aim to study different cognitive functions, primarily attention and perception.
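The three elements listed above can be sketched as a small data structure. This is only an illustrative sketch: the `Item` class, the `make_display` function, and the grid-based search field are assumptions made for the example, not part of any particular experiment platform.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    """One stimulus in the search field (field names are illustrative)."""
    color: str
    shape: str
    x: int  # grid column
    y: int  # grid row
    is_target: bool = False


def make_display(target, distractors, cols=8, rows=6, seed=None):
    """Place one target and its distractors in distinct cells of a grid.

    `target` and each entry of `distractors` are (color, shape) tuples.
    The cols x rows grid stands in for the search field.
    """
    rng = random.Random(seed)
    n_items = 1 + len(distractors)
    if n_items > cols * rows:
        raise ValueError("search field is too small for this many items")
    # Sample distinct cells so that no two items overlap.
    all_cells = [(x, y) for x in range(cols) for y in range(rows)]
    cells = rng.sample(all_cells, n_items)
    (tx, ty), rest = cells[0], cells[1:]
    items = [Item(target[0], target[1], tx, ty, is_target=True)]
    for (color, shape), (x, y) in zip(distractors, rest):
        items.append(Item(color, shape, x, y))
    rng.shuffle(items)  # so the target is not always first in the list
    return items


# One red circle (target) among eleven blue circles (distractors).
display = make_display(("red", "circle"), [("blue", "circle")] * 11, seed=1)
```

Passing a `seed` makes a display reproducible, which can be convenient when the same layout needs to be shown to several participants.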

Common Types of Visual Search Tasks in Psychology Research

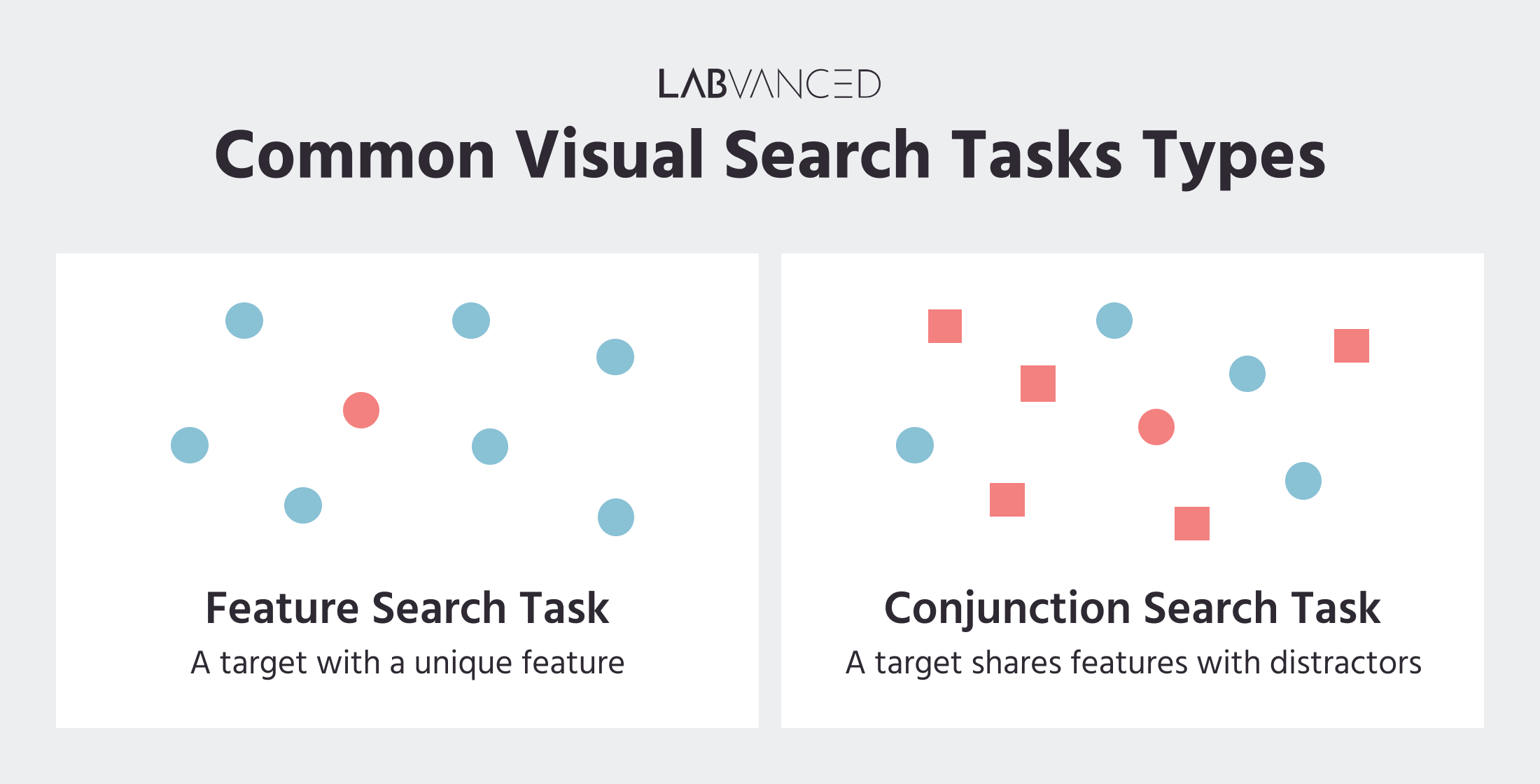

Visual search tasks can be conceptualized into two broad categories:

- Feature Search Tasks: The simplest version of the visual search task, in which the participant is instructed to find a unique target. This target typically 'pops out', like a butterfly among snails.

- Conjunction Search Tasks: A more demanding version in which participants must spend significantly more time and effort identifying the target stimulus because it shares multiple features with the distractors, like identifying an actress who is pictured together with her stunt doubles.

Feature Search Task (left) and Conjunction Search Task (right).

Feature Search Tasks

Feature search tasks involve searching for a target with a unique feature that is not shared by surrounding distractors. The target often pops out in the visual field and can be located without much effort. For example, detection of the red circle among distracting blue circles, as shown in the image above on the left.

The common mode of visual search adopted in feature search tasks is Parallel Search. In this mode, all items are processed at the same time, allowing for quick detection. Detection time is generally independent of the number of distractors: even if the number of distractors increases, the time taken to locate the target stays roughly the same. This is a key difference from the conjunction search task, which uses a Serial Search mode, as discussed below.

Conjunction Search Tasks

In a conjunction search task, the target shares one or more features with the distractors, so it can only be identified by its combination of features, which requires more effort. For example, identifying a red circle among distracting blue circles and red squares, as shown in the image above on the right.
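The difference between the two display types can be made concrete with a short sketch that uses the red-circle examples from this section. The function names and the (color, shape) tuple representation are assumptions made for illustration.

```python
import random


def feature_search_items(n_distractors):
    """Red circle among blue circles: the target differs on color alone."""
    target = ("red", "circle")
    distractors = [("blue", "circle")] * n_distractors
    return target, distractors


def conjunction_search_items(n_distractors, seed=None):
    """Red circle among blue circles and red squares.

    Each distractor shares one feature with the target, so only the
    combination of color and shape identifies the target.
    """
    rng = random.Random(seed)
    target = ("red", "circle")
    half = n_distractors // 2
    distractors = ([("blue", "circle")] * half
                   + [("red", "square")] * (n_distractors - half))
    rng.shuffle(distractors)
    return target, distractors


def has_unique_feature(target, distractors):
    """True if, on some dimension, no distractor shares the target's value."""
    return any(
        all(d[i] != target[i] for d in distractors)
        for i in range(len(target))
    )


f_target, f_distractors = feature_search_items(10)
c_target, c_distractors = conjunction_search_items(10, seed=0)
```

In this sketch, `has_unique_feature` is true for the feature display (no distractor is red) and false for the conjunction display (red and circle both occur among the distractors), which is the property that separates the two task types.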

In a Labvanced example of an online visual search task, the target is the vertical, red rectangle, and the features it shares with the surrounding distractors include shape, color, and orientation.

The mode of visual search utilized here is the Serial Search mode. Since the target shares features with the distractors, each item has to be processed one at a time, making it harder to locate the target. The detection time is generally dependent on the number of distractors (as the number of distractors increases, the time taken to locate the target also increases).
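One common way to express this dependence on the number of distractors is the slope of reaction time against set size, in milliseconds per item. The sketch below fits that line with ordinary least squares. The function name is an assumption, and the reaction times are made-up values for illustration, not real data.

```python
def search_slope(set_sizes, mean_rts_ms):
    """Least-squares fit of RT = intercept + slope * set_size.

    Returns (slope in ms per item, intercept in ms).
    """
    n = len(set_sizes)
    if n != len(mean_rts_ms) or n < 2:
        raise ValueError("need at least two matching (set size, RT) pairs")
    mean_x = sum(set_sizes) / n
    mean_y = sum(mean_rts_ms) / n
    sxx = sum((x - mean_x) ** 2 for x in set_sizes)
    if sxx == 0:
        raise ValueError("set sizes must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(set_sizes, mean_rts_ms))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Made-up mean reaction times (ms), for illustration only.
set_sizes = [4, 8, 16, 32]
feature_rts = [450, 452, 449, 451]        # roughly flat across set sizes
conjunction_rts = [500, 600, 800, 1200]   # constructed as 400 + 25 * set size

feature_slope, _ = search_slope(set_sizes, feature_rts)
conjunction_slope, conjunction_intercept = search_slope(set_sizes, conjunction_rts)
```

With these invented numbers, the feature slope is close to zero, matching the parallel-search pattern, while the conjunction slope is clearly positive, matching the serial-search pattern.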

Data Collection in Visual Search Studies

The data collected through visual search tasks contribute to findings in diverse areas of research.

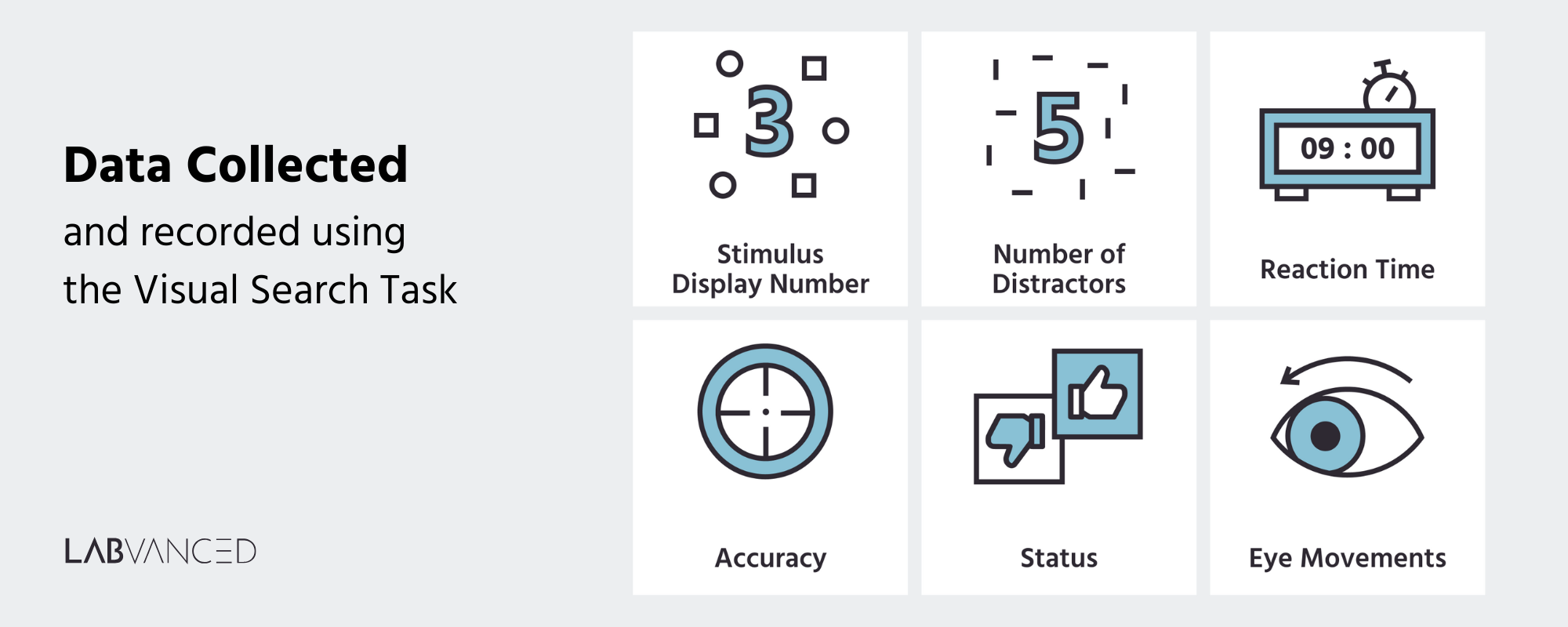

Here are the key types of data collected:

- Stimulus Display Number: The precise position (usually the line/coordinate number) where the target stimulus appears on the screen.

- Number of Distractors: The total number of non-target stimuli in the visual search field.

- Reaction Time: The amount of time taken by a participant to locate the target and respond.

- Accuracy: It is usually measured by the following:

  - Correct responses/hits: The number of targets found.

  - Omission mistakes: The number of targets missed.

  - Commission mistakes: The number of false alarms.

- Status: Indicates whether the participant's response was correct, incorrect, or too slow.

- Eye Movements: Metrics such as fixation duration, saccades, and gaze patterns are collected using Eye Tracking.
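The accuracy and status measures above can be derived from trial-level records along the following lines. This is a sketch under stated assumptions: the `Trial` fields, the 3000 ms deadline, and the choice to count a too-slow target-present trial as an omission are all illustrative and not taken from any specific platform's data format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Trial:
    target_present: bool
    responded_present: Optional[bool]  # None means no response was given
    rt_ms: Optional[float]             # None when there was no response


def status(trial, deadline_ms=3000):
    """Label a trial 'correct', 'incorrect', or 'too slow'."""
    if (trial.responded_present is None or trial.rt_ms is None
            or trial.rt_ms > deadline_ms):
        return "too slow"
    if trial.responded_present == trial.target_present:
        return "correct"
    return "incorrect"


def summarize(trials, deadline_ms=3000):
    """Count hits, omissions, and commissions; average RT on correct trials."""
    hits = omissions = commissions = 0
    correct_rts = []
    for t in trials:
        s = status(t, deadline_ms)
        if s == "correct":
            correct_rts.append(t.rt_ms)
            if t.target_present:
                hits += 1
        elif t.target_present:
            omissions += 1    # target was shown but missed (or answered too late)
        elif s == "incorrect":
            commissions += 1  # false alarm on a target-absent trial
    mean_rt = sum(correct_rts) / len(correct_rts) if correct_rts else None
    return {"hits": hits, "omissions": omissions,
            "commissions": commissions, "mean_correct_rt_ms": mean_rt}


trials = [
    Trial(True, True, 600),    # hit
    Trial(True, False, 900),   # omission
    Trial(False, True, 700),   # commission (false alarm)
    Trial(False, False, 800),  # correct rejection
    Trial(True, None, None),   # no response: too slow
]
summary = summarize(trials)
```

Averaging reaction time over correct trials only is a common convention, but it is a design choice; some analyses also report reaction times for errors.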

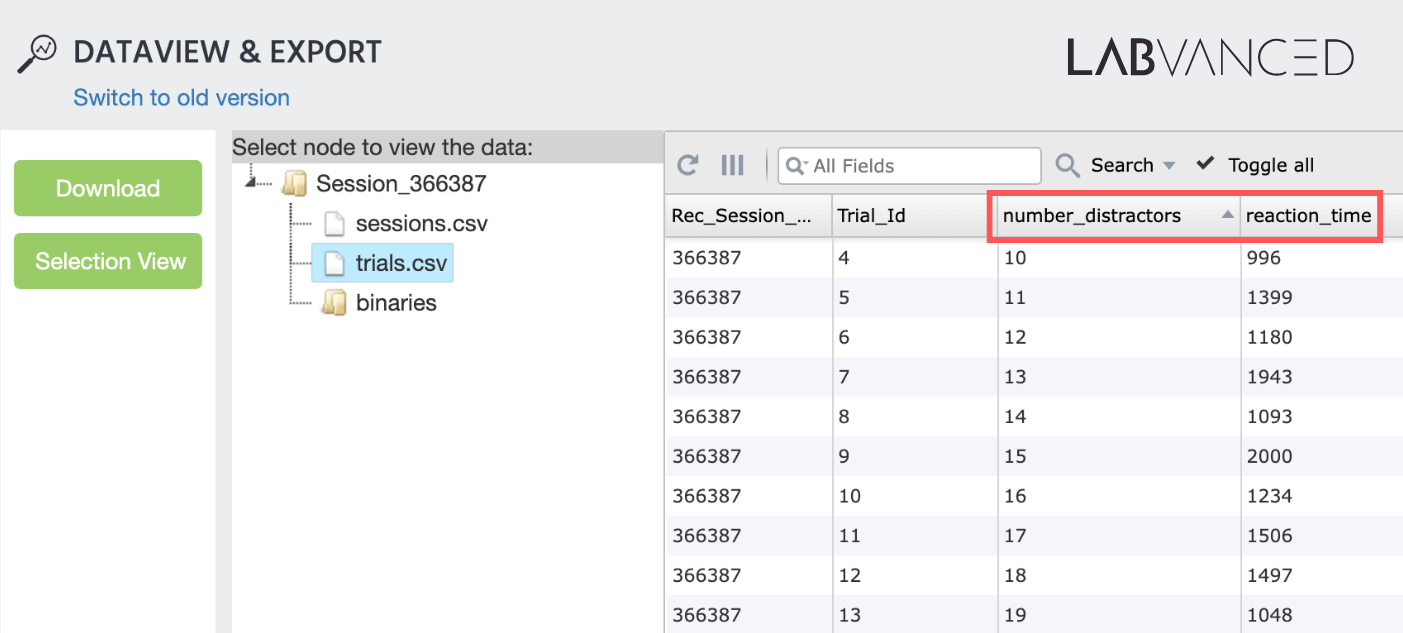

The image above shows an example of what data recorded from a visual search task in Labvanced can look like.

What is Labvanced?

Labvanced is a powerful platform where you can create psychology-based experiments (without having to code) and collect data, all while having access to advanced features such as peer-reviewed webcam eye-tracking and multi-user study support via web and native desktop/mobile applications!

Possible Confounds to Consider

Whether you are administering the visual search task online or in a lab, there are a few confounds worth keeping in mind:

- Executive working memory load: Executive working memory load refers to the mental effort involved in managing and manipulating multiple pieces of information simultaneously while carrying out a task. Working memory is taxed in visual search tasks when many distractors are present, since the participant must search efficiently and avoid scanning the same areas twice. When working memory is overloaded, it becomes harder to focus on finding a target, which increases errors and reduces memory of the target afterward (Nachtnebel et al., 2023).

- Search Direction: Tasks requiring left-right search generally take less time to locate the target than searches in other directions, such as vertical, diagonal, or random (Radhakrishnan et al., 2022).

- Audio distractors: The presence of audio distractors significantly increases visual search time, indicating that background noise could disrupt the focus needed to complete search tasks. This disruption could be due to the competition for cognitive resources to process the visual information and manage the auditory stimuli (Radhakrishnan et al., 2022).

- Familiarity: Encountering familiar objects or logos during a visual search task can significantly decrease search time. Familiarity enables quicker processing of items and reduces the mental load required to identify a target, thus speeding up visual search (Qin et al., 2014).

Associated Cognitive Functions

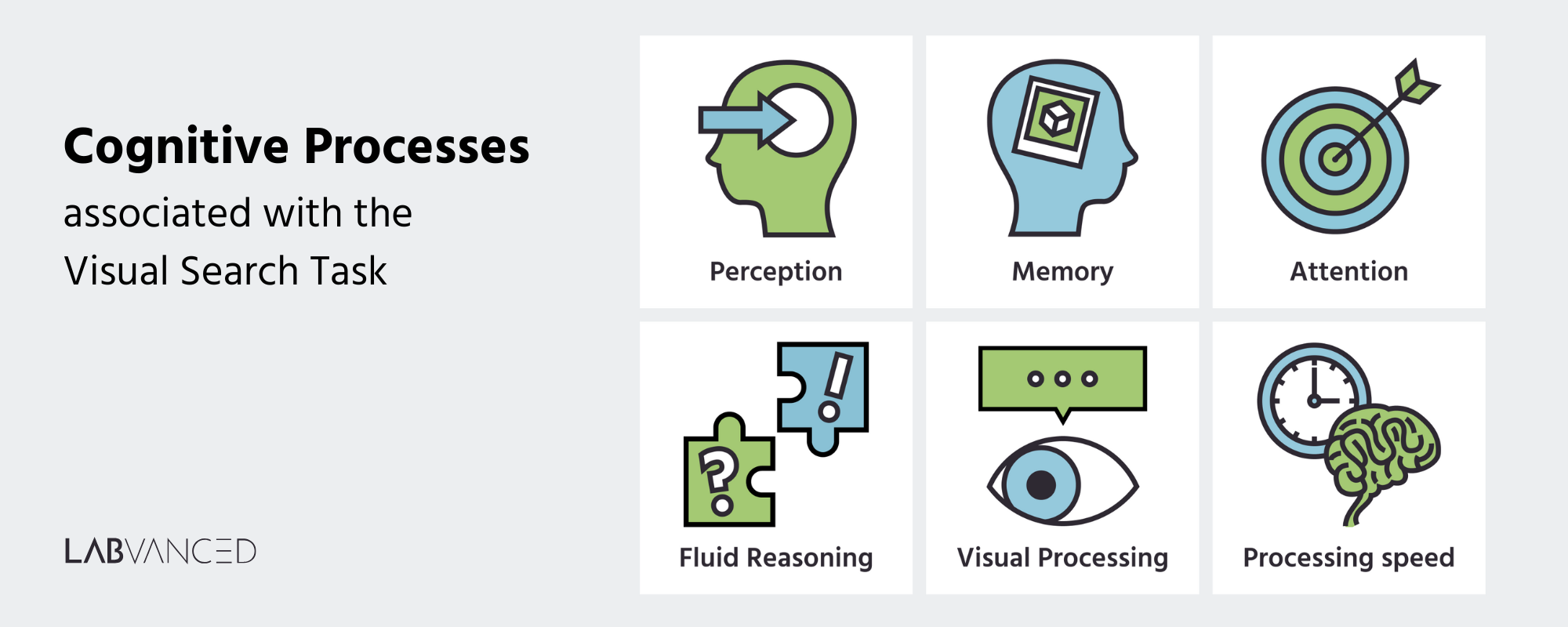

Cognitive functions play a crucial role in the efficient and effective performance of visual search tasks and thus are a major topic of interest in cognitive psychology research.

Below are some cognitive functions underlying these tasks.

- Perception: Perception helps in organizing sensory information by prioritizing relevant features, thereby enabling faster recognition and response. With efficient visual perception, participants can achieve better task performance (Lin & Qian, 2023).

- Memory

  - Short-term Memory: Short-term memory (the capacity to store a small amount of information temporarily) allows individuals to quickly store and process information, using implicit memory (a form of short-term memory) to respond faster to frequent stimuli by accumulating memory traces over time (Maljkovic & Martini, 2005).

  - Working Memory: Higher working memory capacity helps individuals manage cognitive resources more effectively during a visual search task, especially in dual task scenarios (a visual search task performed along with another cognitive task) (Redden et al., 2022).

  - Long-term Memory: Long-term memory (the store of prior events and knowledge) enables the retrieval of past visual experiences during visual search tasks and this further guides current search behaviours. This also aids the participants in quicker identification and selection (Friedman et al., 2018).

- Attention

  - Selective Attention: Selective attention plays an important role in visual search tasks by allowing individuals to focus on specific items or features within a scene. This helps in efficient identification of targets and rejection of irrelevant stimuli (Wolfe, 2021).

  - Sustained Attention: Visual search tasks require continuous engagement, and sustained attention ensures a consistent allocation of attentional resources throughout the task. This minimizes errors, such as overlooking targets, thereby enhancing the efficiency of visual search (Adam & Serences, 2022).

  - Shifting Attention: As the search set size increases, the demand for serial shifting of attention also increases in order to process the multiple stimuli. Shifting attention enables participants to move attention effectively across various stimuli, regardless of whether the search is performed in a parallel or serial manner (Lee & Han, 2020).

  - Divided Attention: When two target stimuli are located across widely separated spatial locations, there is a need to process information from both locations. Divided attention is crucial for efficient visual search performance in these cases where processing information simultaneously from multiple spatial locations is required (Davis et al., 2003).

- Fluid Reasoning: Also known as fluid intelligence, it refers to the ability to solve problems that cannot be solved with previous learning. Studies have indicated that it enables individuals to exhibit efficient eye movement behaviours during visual searches. Individuals with higher fluid reasoning also demonstrate improved accuracy and speed in identifying targets (Wagner et al., 2024).

- Visual Processing: Visual processing is the cognitive function that enables individuals to interpret and understand visual information and thereby recognize patterns and focus attention on relevant stimuli while ignoring distractors (Wagner et al., 2024).

- Processing Speed: It is a vital cognitive function in visual search tasks, as it enables individuals to swiftly process visual information, leading to quicker target identification among distractors. Faster processing speed supports rapid decision-making and further enhances performance in real-world visual search scenarios (Wagner et al., 2024).

Variations in Visual Search Task Designs

Visual search tasks can be administered online or in the lab and they can vary significantly in their complexity, with the main differences being in the types and numbers of stimuli used, as well as the target-distractor similarity.

Here are a few variations of the task:

- Continuous visual search tasks: The search field in this variation would include multiple targets and distractors (Hokken et al., 2022).

- Single-frame search tasks: In this task, the observer is required to make a simple yes/no decision on whether the target is present (Hokken et al., 2022).

- Real-world visual search tasks: This includes tasks set up in real world spaces, rather than using a computerized screen. A study utilized real LEGO blocks for the task (Sauter et al., 2020).

- Sky Search: It is a subtest of the TEA-Ch assessment battery. In this variation, the observer is required to locate specific spaceships on a large piece of paper that is filled with similar-looking decoy spaceships (Nasiri et al., 2023).

- Letter Cancellation Task: This variation of the visual search task requires participants to identify and mark 60 target letters 'A' distributed among other distracting letters (Rorden & Karnath, 2010).

- TMM3 puzzle game: This variation integrates visual search and gaming. Targets are always present, requiring the players to continuously scan and match identical tiles based on features like color and shape (Chesham et al., 2019).

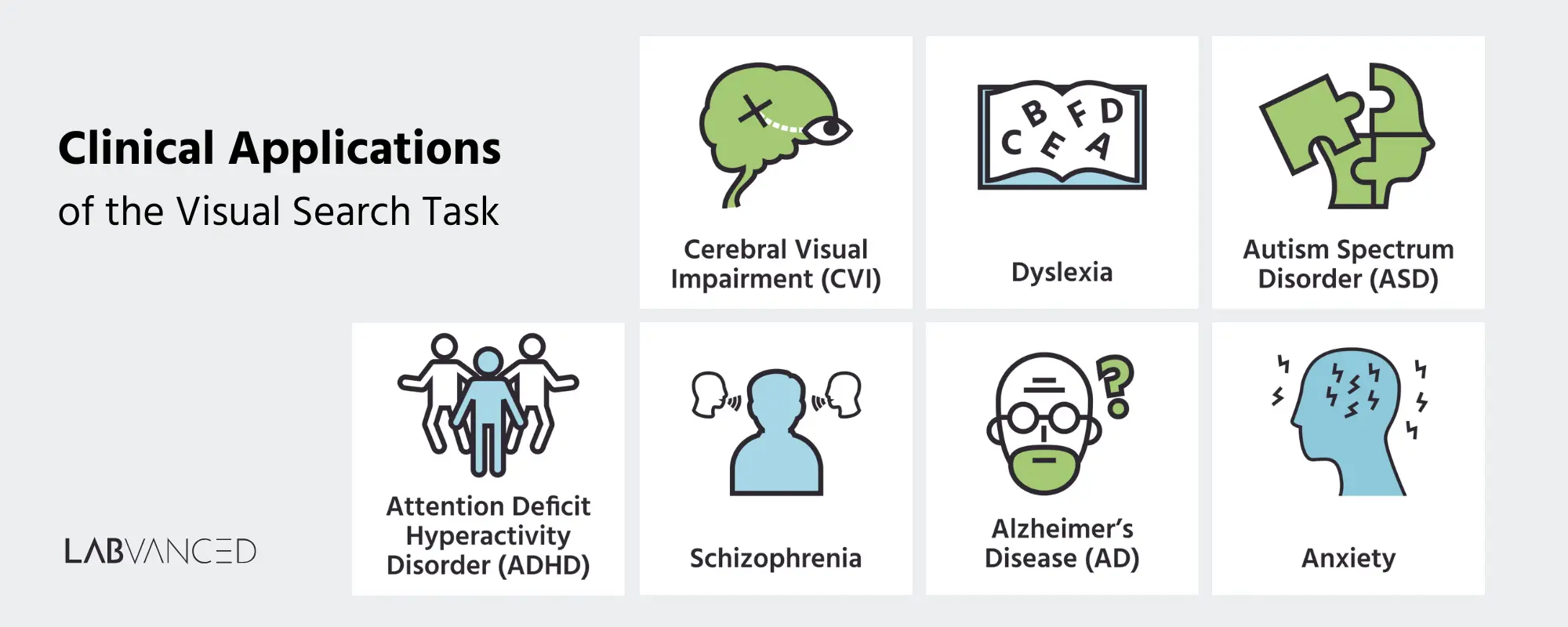

Clinical Applications of Visual Search Tasks

Visual search tasks extend beyond the world of cognitive psychology and play a crucial role in clinical psychology as well, due to their importance in understanding the various attentional and perceptual processes underlying different neurodevelopmental and psychological conditions.

Given below are a few examples:

- Attention Deficit Hyperactivity Disorder (ADHD): Visual search tasks are an excellent measure of selective attention, an area that is often impaired in ADHD. These tasks help in identifying response-related deficits in individuals and thereby understand the cognitive and behavioral characteristics in ADHD (Mason et al., 2003).

- Cerebral Visual Impairment (CVI): Visual selective attention dysfunction (VSAD) is common in children with CVI. Visual search tasks reveal slower reaction times and reduced accuracy in CVI, enabling targeted interventions in this population (Hokken et al., 2022).

- Dyslexia: Dyslexia (a learning disability characterized by difficulty processing written language and reading) can be differentiated from other neurodevelopmental conditions using visual search tasks. Children with dyslexia often exhibit difficulties with rapid and accurate processing of visual information, which can affect their performance in visual search tasks (Hokken et al., 2022).

- Autism Spectrum Disorder (ASD): Visual search tasks help in uncovering and understanding the neurodevelopmental challenges faced by individuals with ASD. A study found that individuals with ASD showed specific patterns in visual search task performance, such as in fixation durations and post-search processes (Canu et al., 2021).

- Schizophrenia: Studies show that individuals with schizophrenia show significant impairments in visual search task performance, such as increased fixation time and variability in search patterns. These findings aid in understanding the attentional and perceptual characteristics of schizophrenia in depth (Canu et al., 2021).

- Alzheimer’s Disease (AD): Visual search tasks in the Alzheimer's population could provide insights into cognitive decline and attentional impairments. Research shows that individuals with AD demonstrate significant deficits in visual search efficiency, such as slower reaction times, increased fixation durations, and difficulties in accurately identifying target stimuli amidst distractors (Pereira et al., 2020).

- Anxiety: Visual search tasks have been utilized to assess the impact of anxiety on attentional control and processing efficiency during the task. They provide insights into how anxiety influences various cognitive functions related to visual attention and decision-making (Vater et al., 2016).

Applications in Other Domains

Visual search tasks are not only important in the field of clinical psychology, but they also extend to various other fields. Given below are some examples of how VST has been utilized across different domains:

- Neuroscience: Visual search tasks have been extensively utilized to explore the functional interactions within the brain. In a study that combined a VST with fMRI neuroimaging and used myopia-correcting lenses to manipulate visual fatigue, researchers were able to decode brain networks related to visual fatigue and cognitive workload (Ryu et al., 2024).

- Neurophysiology: Visual search tasks also have significant implications in neurophysiology. Research examining ocular tracking with eye tracking technology revealed that the visual identification of the speed and direction of a moving target is tightly coupled with motor selection (Souto & Kerzel, 2021). It is also interesting to note that eye movement metrics are useful for identifying visual search impairments.

- Law Enforcement: Visual search tasks such as the Cambridge Face Memory Test (CFMT+), which measures an individual’s ability to recognize faces, have been widely utilized in law enforcement to predict individual differences in police officers' performance when searching for unfamiliar faces in real-world scenarios (Thielgen et al., 2021).

- Human-Computer Interaction: Visual search tasks have important applications in human-computer interaction. They help in understanding how users visually scan and interact with displays and this further aids in designing interfaces that align with users’ visual capabilities and limitations (Halverson & Hornof, 2007).

- Marketing: In the field of marketing, visual search tasks are used to understand how consumers visually interact with various product displays. These provide insights on how to design products that effectively capture the attention of individuals and thereby influence consumer decision making (Banović et al., 2014; Qin et al., 2014).

- Aviation: In the domain of aviation security, visual search tasks aid in understanding how different factors such as time pressure and target expectancy could influence detection performance, response time, and visual search. These could further help in improving safety-critical tasks in airport security.

Overall, the visual search task and its variations such as the conjunction search task offer many insights into cognitive psychology and have the potential to be connected to many real world applications.

Conclusion

Visual search tasks help us better understand the cognitive psychology behind how people focus, find, and process information in their environment. They reveal a lot about how our attention and decision-making work in everyday life. As technology grows, so do the ways we can use visual search tasks to solve real-world problems. This makes them a valuable tool not just for researchers, but also for creating a better and more efficient world for everyone!

References

Adam, K., & Serences, J. (2022). Interactions of sustained attention and visual search. Journal of Vision, 22(14), 4355. https://doi.org/10.1167/jov.22.14.4355

Banović, M., Rosa, P. J., & Gamito, P. (2014). Eye of the beholder: Visual search, attention and product choice.

Canu, D., Ioannou, C., Müller, K., Martin, B., Fleischhaker, C., Biscaldi, M., Beauducel, A., Smyrnis, N., van Elst, L. T., & Klein, C. (2021). Visual search in neurodevelopmental disorders: Evidence towards a continuum of impairment. European Child & Adolescent Psychiatry, 31(8), 1–18. https://doi.org/10.1007/s00787-021-01756-z

Chesham, A., Gerber, S. M., Schütz, N., Saner, H., Gutbrod, K., Müri, R. M., Nef, T., & Urwyler, P. (2019). Search and match task: Development of a taskified match-3 puzzle game to assess and practice visual search. JMIR Serious Games, 7(2). https://doi.org/10.2196/13620

Davis, E. T., Shikano, T., Peterson, S. A., & Keyes Michel, R. (2003). Divided attention and visual search for simple versus complex features. Vision Research, 43(21), 2213–2232. https://doi.org/10.1016/s0042-6989(03)00339-0

Friedman, G. N., Johnson, L., & Williams, Z. M. (2018). Long-term visual memory and its role in learning suppression. Frontiers in Psychology, 9. https://doi.org/10.3389/fpsyg.2018.01896

Halverson, T., & Hornof, A. J. (2007). A minimal model for predicting visual search in human-computer interaction. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 431–434. https://doi.org/10.1145/1240624.1240693

Hokken, M. J., Krabbendam, E., van der Zee, Y. J., & Kooiker, M. J. (2022). Visual selective attention and visual search performance in children with CVI, ADHD, and dyslexia: A scoping review. Child Neuropsychology, 29(3), 357–390. https://doi.org/10.1080/09297049.2022.2057940

Lee, J., & Han, S. W. (2020). Visual search proceeds concurrently during the attentional blink and response selection bottleneck. Attention, Perception, & Psychophysics, 82(6), 2893–2908. https://doi.org/10.3758/s13414-020-02047-6

Lin, W., & Qian, J. (2023). Priming effect of individual similarity and ensemble perception in visual search and working memory. Psychological Research, 88(3), 719–734. https://doi.org/10.1007/s00426-023-01902-z

Maljkovic, V., & Martini, P. (2005). Implicit short-term memory and event frequency effects in visual search. Vision Research, 45(21), 2831–2846. https://doi.org/10.1016/j.visres.2005.05.019

Mason, D. J., Humphreys, G. W., & Kent, L. S. (2003). Exploring selective attention in ADHD: Visual search through space and time. Journal of Child Psychology and Psychiatry, 44(8), 1158–1176. https://doi.org/10.1111/1469-7610.00204

Nachtnebel, S. J., Cambronero-Delgadillo, A. J., Helmers, L., Ischebeck, A., & Höfler, M. (2023). The impact of different distractions on outdoor visual search and object memory. Scientific Reports, 13(1). https://doi.org/10.1038/s41598-023-43679-6

Nasiri, E., Khalilzad, M., Hakimzadeh, Z., Isari, A., Faryabi-Yousefabad, S., Sadigh-Eteghad, S., & Naseri, A. (2023). A comprehensive review of attention tests: Can we assess what we exactly do not understand? The Egyptian Journal of Neurology, Psychiatry and Neurosurgery, 59(1). https://doi.org/10.1186/s41983-023-00628-4

Pereira, M. L., Camargo, M. von, Bellan, A. F., Tahira, A. C., dos Santos, B., dos Santos, J., Machado-Lima, A., Nunes, F. L. S., & Forlenza, O. V. (2020). Visual search efficiency in mild cognitive impairment and Alzheimer’s disease: An eye movement study. Journal of Alzheimer’s Disease, 75(1), 261–275. https://doi.org/10.3233/jad-190690

Qin, X. A., Koutstaal, W., & Engel, S. A. (2014). The hard-won benefits of familiarity in visual search: Naturally familiar brand logos are found faster. Attention, Perception, & Psychophysics, 76(4), 914–930. https://doi.org/10.3758/s13414-014-0623-5

Radhakrishnan, A., Balakrishnan, M., Behera, S., & Raghunandhan, R. (2022). Role of reading medium and audio distractors on visual search. Journal of Optometry, 15(4), 299–304. https://doi.org/10.1016/j.optom.2021.12.004

Redden, R. S., Eady, K., Klein, R. M., & Saint-Aubin, J. (2022). Individual differences in working memory capacity and visual search while reading. Memory & Cognition, 51(2), 321–335. https://doi.org/10.3758/s13421-022-01357-4

Rorden, C., & Karnath, H.-O. (2010). A simple measure of neglect severity. Neuropsychologia, 48(9), 2758–2763. https://doi.org/10.1016/j.neuropsychologia.2010.04.018

Ryu, H., Ju, U., & Wallraven, C. (2024). Decoding visual fatigue in a visual search task selectively manipulated via myopia-correcting lenses. Frontiers in Neuroscience, 18. https://doi.org/10.3389/fnins.2024.1307688

Sauter, M., Stefani, M., & Mack, W. (2020). Towards interactive search: Investigating visual search in a novel real-world paradigm. Brain Sciences, 10(12), 927. https://doi.org/10.3390/brainsci10120927

Souto, D., & Kerzel, D. (2021). Visual selective attention and the control of tracking eye movements: A critical review. Journal of Neurophysiology, 125(5), 1552–1576. https://doi.org/10.1152/jn.00145.2019

Thielgen, M. M., Schade, S., & Bosé, C. (2021). Face processing in police service: The relationship between laboratory-based assessment of face processing abilities and performance in a real-world identity matching task. Cognitive Research: Principles and Implications, 6(1). https://doi.org/10.1186/s41235-021-00317-x

Vater, C., Roca, A., & Williams, A. M. (2016). Effects of anxiety on anticipation and visual search in dynamic, time-constrained situations. Sport, Exercise, and Performance Psychology, 5(3), 179–192. https://doi.org/10.1037/spy0000056

Wagner, J., Zurlo, A., & Rusconi, E. (2024). Individual differences in visual search: A systematic review of the link between visual search performance and traits or abilities. Cortex, 178, 51–90. https://doi.org/10.1016/j.cortex.2024.05.020

Wickens, C. D. (2023). Pilot attention and perception and spatial cognition. Human Factors in Aviation and Aerospace, 141–170. https://doi.org/10.1016/b978-0-12-420139-2.00009-5

Wolfe, J. M. (2021). Guided search 6.0: An updated model of visual search. Psychonomic Bulletin & Review, 28(4), 1060–1092. https://doi.org/10.3758/s13423-020-01859-9