OpenAI Trigger

Overview

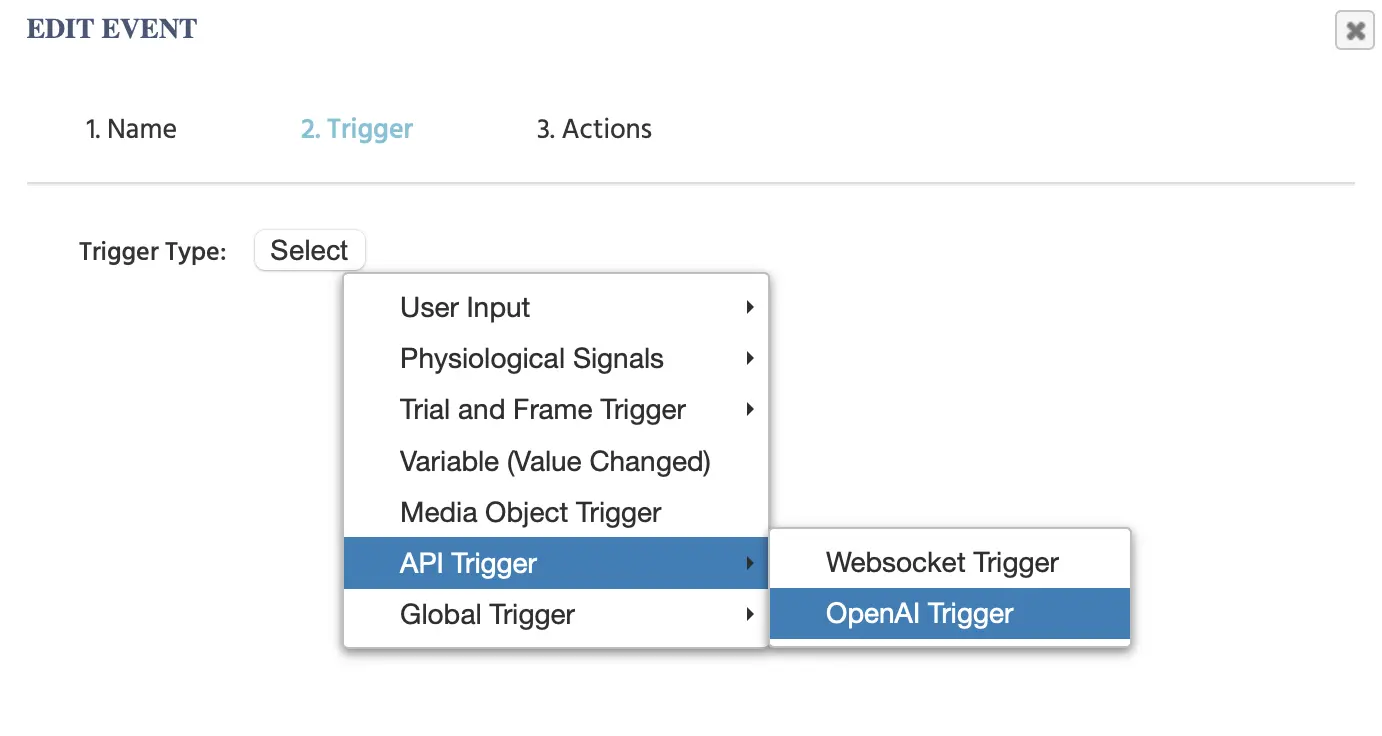

The OpenAI Trigger (listed under API Triggers in the main menu) can be used to initiate an action based on incoming information from OpenAI. With this trigger, you select one of the listed Model Types to specify what kind of incoming information acts as the trigger: text-based, image-based, or audio-based.

NOTE: For this option to be available, you must first enter your API Key in the Settings tab.

Selecting this option will lead to the following parameters being displayed:

Model Types Available

Using the Model Type drop-down, the following options are available:

| Model Type | Description |
|---|---|
| ChatGPT | Incoming text-based responses from OpenAI |
| Image Generation | Incoming image-based responses |
| Generate Audio | Incoming audio-based responses |

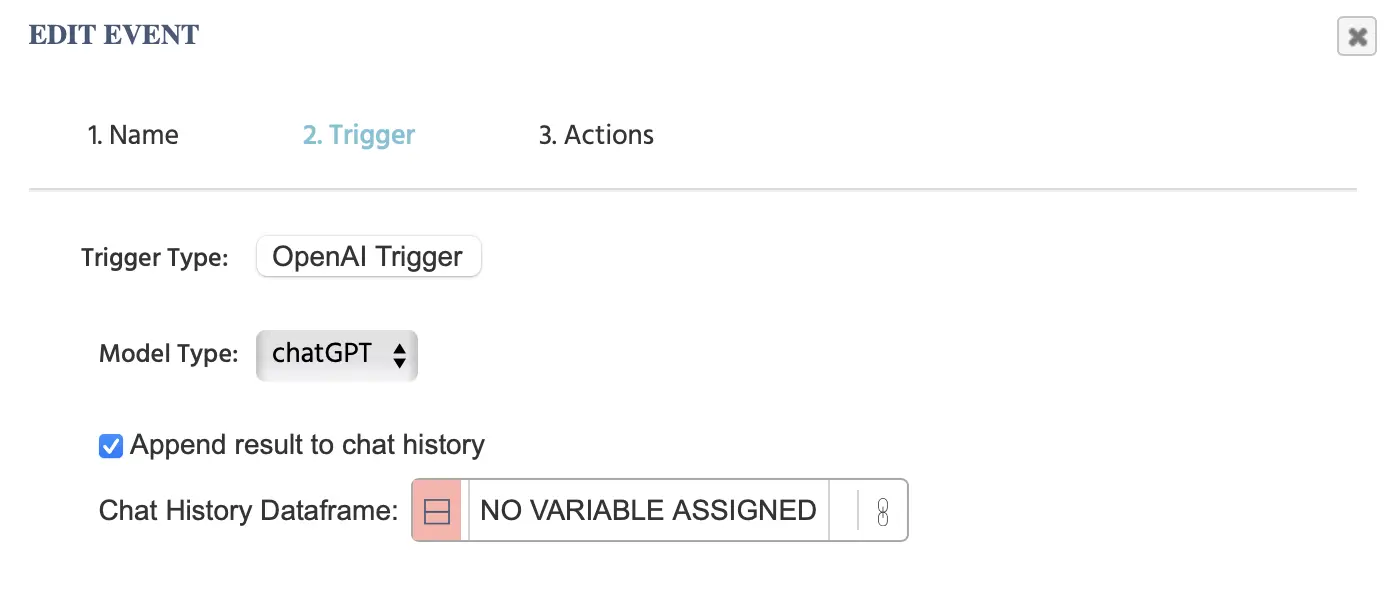

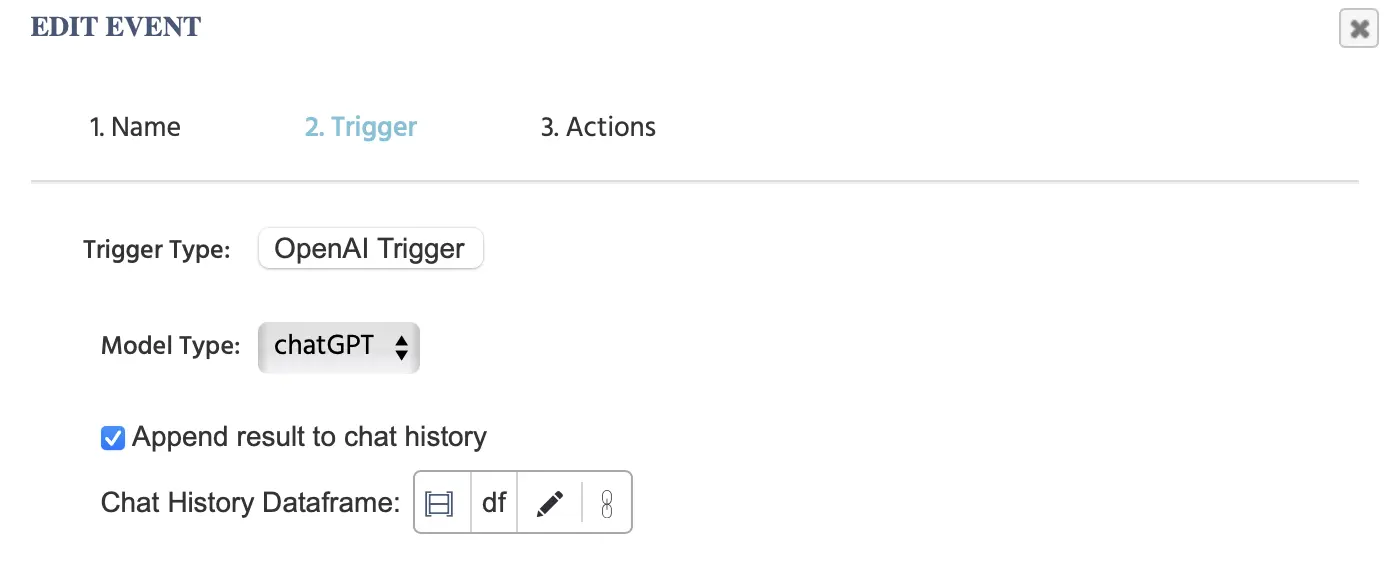

ChatGPT Model Type

In the example below, the assigned data frame is called ‘df’, and the result from the ‘OpenAI Trigger’ will be appended to it. This data frame needs to have two columns: the first column denotes the ‘role’ and the second column the ‘chat message.’ The values from the action are automatically appended to the data frame that is linked here.

NOTE 1: If you are also using the ‘Send to OpenAI’ action, you need to use the same data frame there that you have linked here.

NOTE 2: Also refer to this walkthrough where we build a study step-by-step, integrating ChatGPT for a text-to-text-based study with a chat and utilizing these trigger options.
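To make the two-column layout described above more concrete, here is a minimal sketch in Python. It is only an illustration of the data shape, not how the platform stores data frames internally: the `ChatFrame` class, its `append` method, and the role labels `user` and `assistant` are assumptions introduced for this example.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ChatFrame:
    """Hypothetical stand-in for the two-column data frame 'df'.

    Each row is (role, chat message). The class and its method are
    illustrative assumptions, not part of the platform.
    """
    rows: List[Tuple[str, str]] = field(default_factory=list)

    def append(self, role: str, message: str) -> None:
        # Add one row: first column is the role, second the chat message.
        self.rows.append((role, message))


df = ChatFrame()

# A row such as the one a 'Send to OpenAI' action might add (assumed role label).
df.append("user", "What is the capital of France?")

# A row such as the one the OpenAI Trigger might append (assumed role label).
df.append("assistant", "The capital of France is Paris.")

for role, message in df.rows:
    print(f"{role}: {message}")
```

Because both the action and the trigger write to the same frame, the rows accumulate in order and together form the full chat history.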

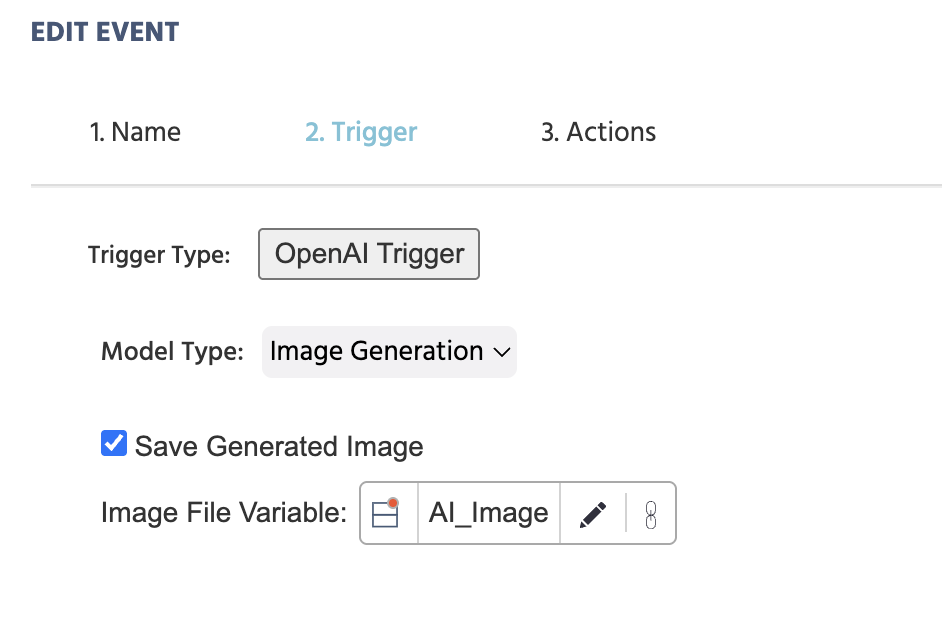

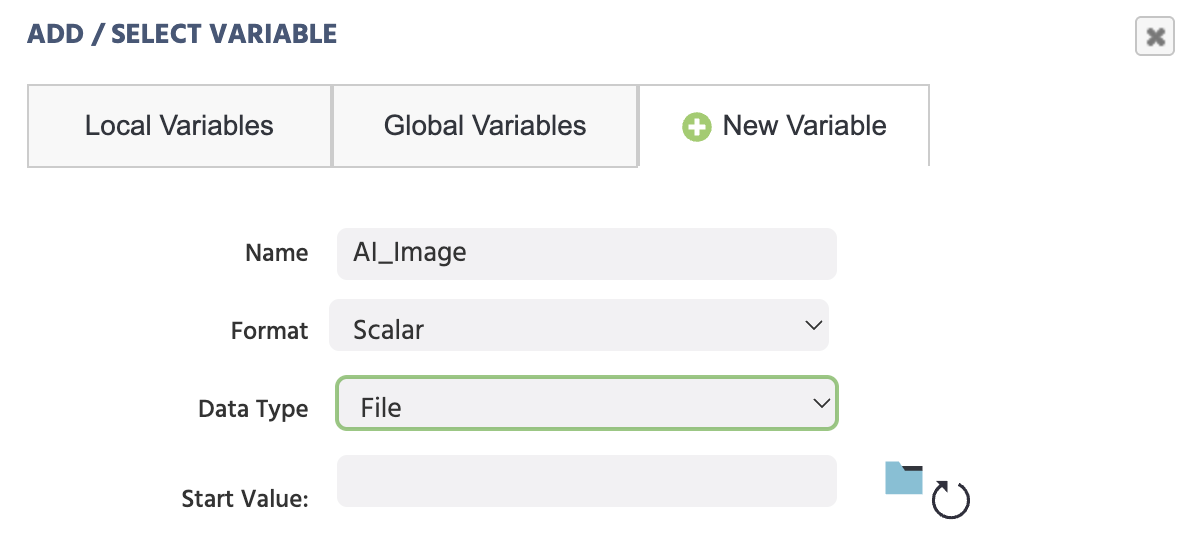

Image Generation - Model Type

With this option, you can indicate that a generated image should be saved and specify the Variable in which it should be saved.

NOTE: When creating and assigning the variable here, remember to set the variable type to File so that the image file can be stored in it.

Useful Demo: Check out this demo that makes use of image generation via the OpenAI Trigger and action. The participant is asked to input a prompt and this prompt is then used to generate an image.

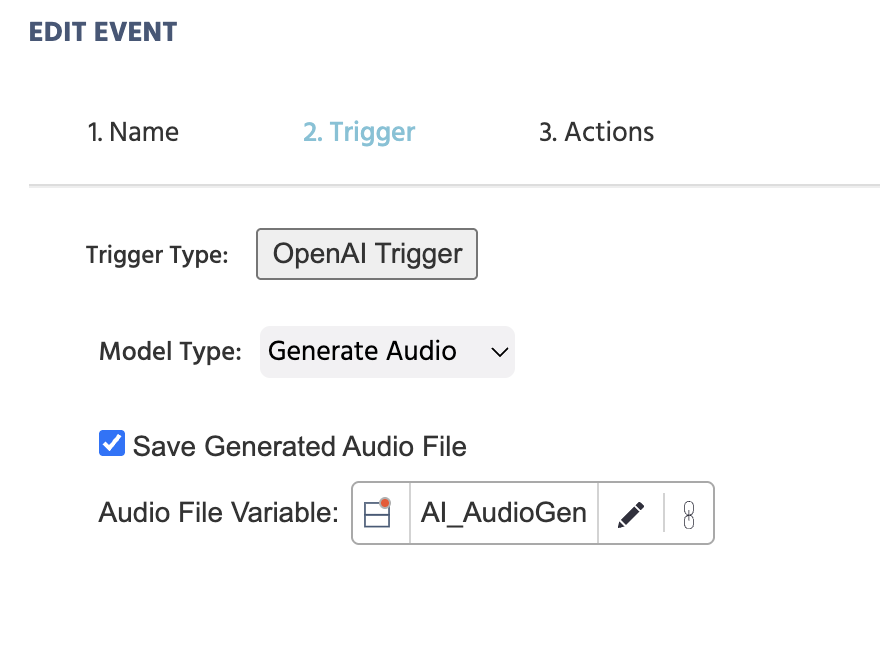

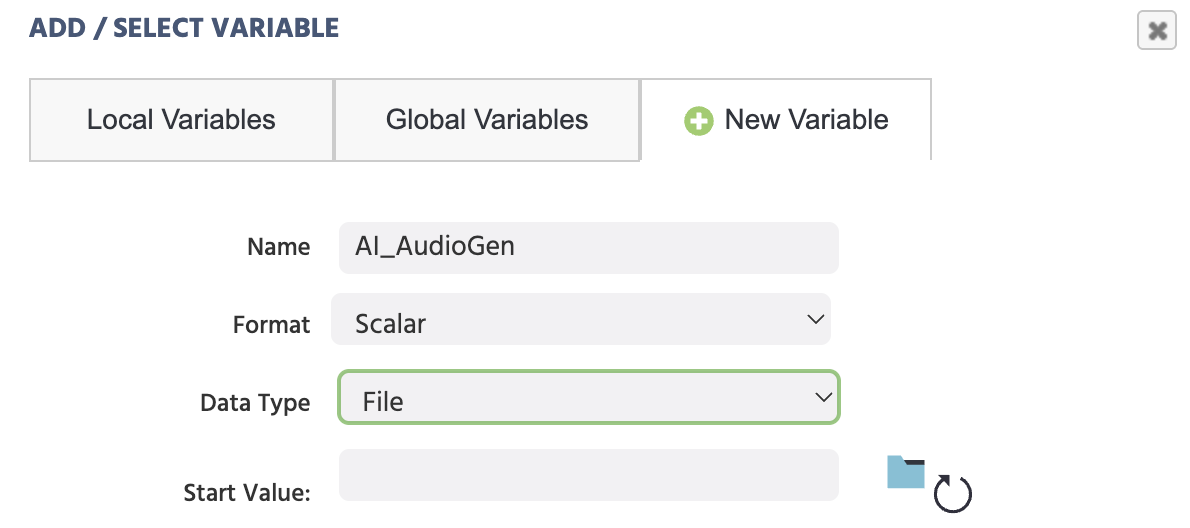

Generate Audio - Model Type

With this option, you can indicate that a generated audio file should be saved and specify the Variable in which it should be saved.

NOTE: When creating and assigning the variable here, remember to set the variable type to File so that the audio file can be stored in it.

Useful Actions

NOTE: After selecting the OpenAI Trigger in the event system, you can reference trigger-specific OpenAI values in various actions via the value-select menu.